Ever had that magical moment where your phone or computer seems to read your mind, instantly pulling up exactly what you need? You tap an app, and it just opens. You revisit a webpage, and it appears in the blink of an eye. While it might feel like telepathy, behind the scenes, a clever component is working hard to make your digital life feel incredibly snappy. This unsung hero? It’s often the magic of Cache Memory.

To truly grasp its brilliance, let’s use a simple picture. Imagine your device’s main memory (RAM), backed by its hard drive or SSD, as a vast, sprawling library. It holds absolutely everything, but finding a specific book (or piece of data) can take a little time, requiring a trip deep into the aisles.

Now, picture Cache Memory not as another section in the library, but as a small, remarkably efficient ‘quick access shelf’ placed right next to the librarian – who, in this analogy, is your device’s central processing unit, or CPU. This shelf is special; it doesn’t hold every book, but it keeps copies of the books the librarian (CPU) grabs most frequently. Instead of trekking into the library depths every single time it needs that popular novel or instruction manual, the CPU can just reach out and grab it instantly from the quick access shelf.

This simple idea is the core of what cache memory does. It’s a small, high-speed, temporary storage area that holds copies of the data and instructions your processor is likely to need again soon. By keeping this frequently used information close and accessible, it drastically cuts down the time your CPU spends waiting, making your entire computing experience feel smoother and faster.


Why This Speedy Shelf Matters So Much

In the world of computing, speed is paramount. The CPU is like the brain of your device, constantly processing instructions and manipulating data. However, there’s a significant speed mismatch between the lightning-fast CPU, the comparatively slow main memory (RAM), and the even slower storage (SSD/HDD).

If the CPU had to wait for every single piece of data to be fetched directly from main memory, it would spend most of its time idle, twiddling its thumbs while waiting for information to arrive. This wait time is known as latency. Cache memory exists to bridge this gap.

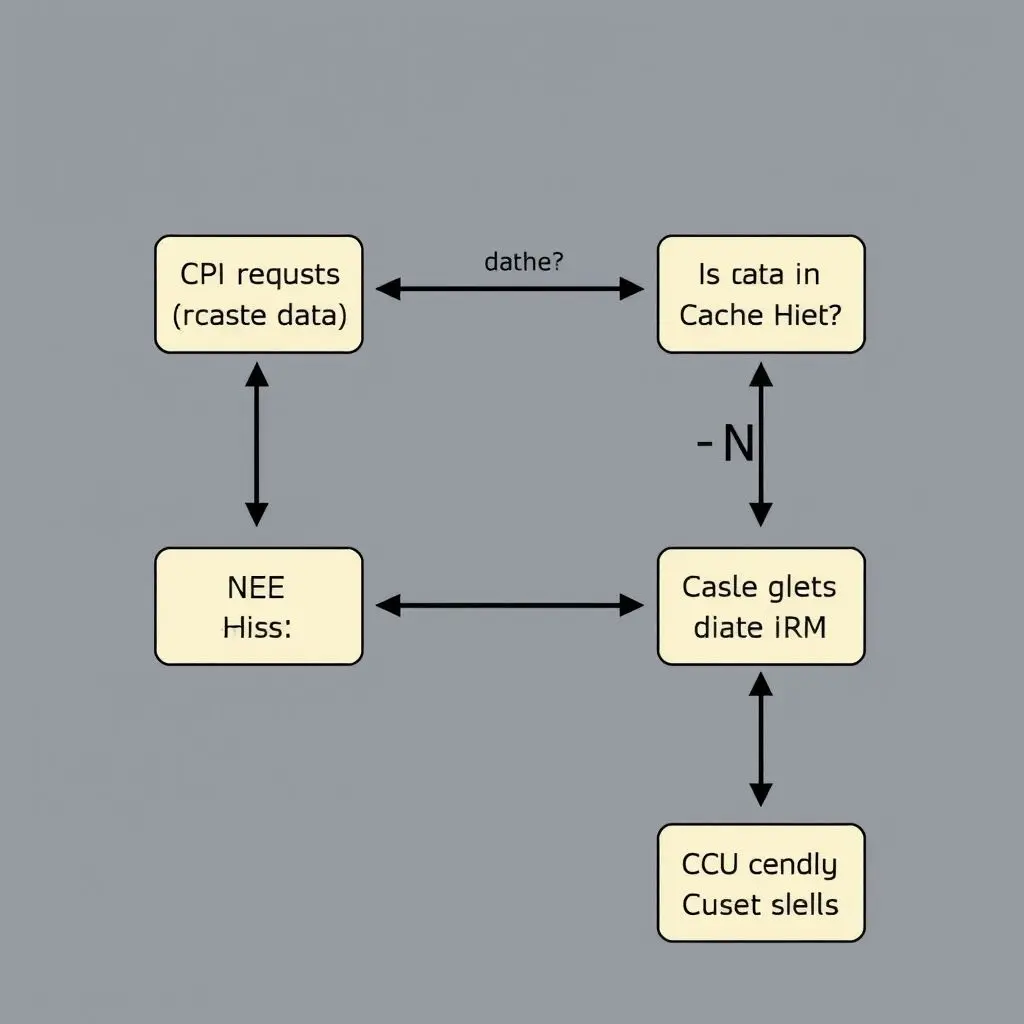

By storing frequently accessed data on a much faster type of memory located physically closer to the CPU, cache dramatically reduces latency. When the CPU needs data, it checks the cache first. If the data is there (a cache hit), it’s retrieved almost instantly. If it’s not (a cache miss), the CPU then has to go to main memory, retrieve the data, and also bring a copy back to the cache, anticipating it might be needed again.
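The hit-or-miss check described above can be sketched in a few lines of Python. This is only a toy model, not how hardware actually works: the `main_memory` and `cache` dictionaries, the `read` function, and the made-up contents are all assumptions for illustration.

```python
# Toy model: a small dict acts as the cache in front of a larger "main memory".
main_memory = {address: address * 10 for address in range(100)}  # made-up contents
cache = {}
stats = {"hits": 0, "misses": 0}


def read(address):
    """Return the value at address, checking the cache before main memory."""
    if address in cache:
        stats["hits"] += 1      # cache hit: served from the fast copy
        return cache[address]
    stats["misses"] += 1        # cache miss: fall back to main memory
    value = main_memory[address]
    cache[address] = value      # keep a copy in case it is needed again
    return value


for address in [7, 7, 7, 42, 7, 42]:
    read(address)

print(stats)  # {'hits': 4, 'misses': 2}
```

Only the first access to each address misses; every repeat is served from the cache, which is exactly the behavior that makes repeated work feel fast.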

This quick access mechanism is fundamental to modern computing performance. It’s a key reason why:

- Applications launch faster.

- Complex software runs more smoothly.

- Multitasking feels more responsive.

- Websites load quicker (browser cache).

Without cache, even the most powerful processors would be severely bottlenecked by the speed of accessing data from main memory or storage.

Delving a Little Deeper: Types of CPU Cache

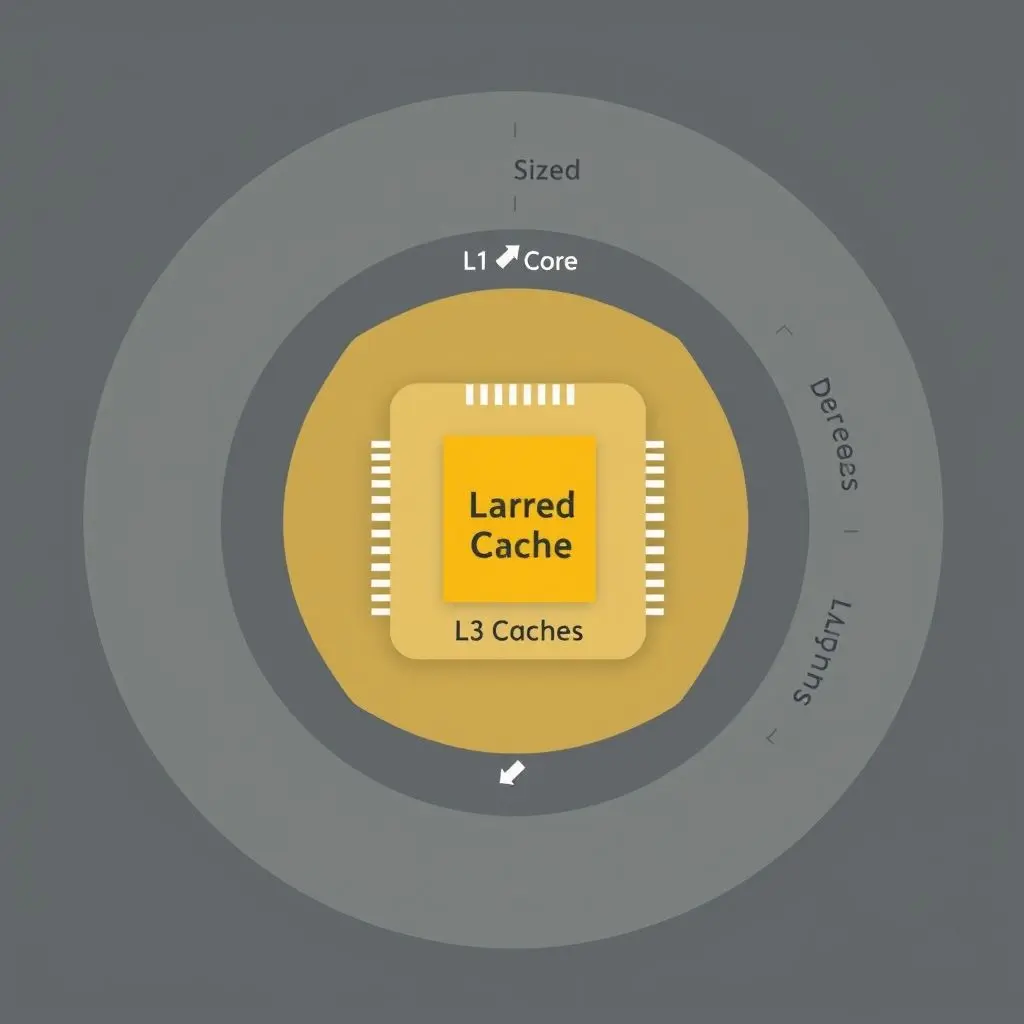

The ‘quick access shelf’ isn’t just one big shelf; it’s often organized into multiple levels, each with different characteristics. Think of it as having several smaller shelves, some right on the librarian’s desk, some just a step away.

On a modern CPU, you’ll typically find three levels of cache:

L1 Cache (Level 1 Cache)

This is the smallest and fastest type of cache, located directly on the CPU core itself. It’s like the notes and items the librarian keeps right on their desk for immediate use. L1 cache is typically split into two parts: one for instructions the CPU needs to execute (L1 Instruction Cache) and one for the data those instructions operate on (L1 Data Cache). Its small size allows it to operate at nearly the same speed as the CPU core, providing the quickest possible access.

L2 Cache (Level 2 Cache)

Larger than L1 cache, L2 cache is also very fast, though slightly slower than L1. Depending on the CPU design, it might be exclusive to a single core or shared between a couple of cores. Think of this as a slightly larger shelf just a step or two from the librarian’s desk. If the CPU doesn’t find the data in L1, it looks in L2 next, before moving on to L3 and, finally, main memory.

L3 Cache (Level 3 Cache)

This is generally the largest and slowest level of cache, but still significantly faster than main memory. L3 cache is usually shared among all the CPU cores on a processor. It acts as a last resort before accessing main memory. In our analogy, this is the larger ‘staging area’ shelf shared by all the librarians in that section of the library.

The hierarchical structure means the CPU checks the fastest, smallest cache (L1) first. If the data isn’t there, it moves to the next level (L2), and then to L3, and finally to main memory if necessary. This layered approach optimizes for both speed and capacity.
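To make that lookup order concrete, here is a rough Python sketch that walks the levels in turn and adds up the cost of each check. The cycle counts are illustrative assumptions rather than measurements of any real processor, and the sketch deliberately skips details such as copying data into the faster levels after a miss.

```python
# Illustrative latencies in CPU cycles (assumed values; real ones vary by processor).
LEVELS = [("L1", 4), ("L2", 12), ("L3", 40)]
MEMORY_LATENCY = 200


def lookup(address, caches):
    """Walk L1 -> L2 -> L3 -> main memory; return (where found, total cycles)."""
    cycles = 0
    for name, latency in LEVELS:
        cycles += latency  # pay the cost of checking this level
        if address in caches[name]:
            return name, cycles
    return "RAM", cycles + MEMORY_LATENCY  # missed every cache level


# Hypothetical contents: each level holds a few more addresses than the one before.
caches = {"L1": {0x10}, "L2": {0x10, 0x20}, "L3": {0x10, 0x20, 0x30}}

for address in (0x10, 0x20, 0x30, 0x40):
    print(hex(address), lookup(address, caches))
# 0x10 ('L1', 4)
# 0x20 ('L2', 16)
# 0x30 ('L3', 56)
# 0x40 ('RAM', 256)
```

Even with made-up numbers, the pattern is clear: the deeper the CPU has to look, the longer it waits, which is why the smallest, fastest shelf gets checked first.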

Beyond the CPU: Other Places Cache Helps

While CPU cache is perhaps the most critical for raw processing speed, the concept of caching is used extensively in computing to improve performance across many different areas:

- Browser Cache: Your web browser stores copies of website files (HTML, CSS, images) you’ve visited. The next time you visit that site, the browser can load these files from your local cache instead of downloading them again, making pages load much faster.

- Disk Cache: Your operating system uses a portion of RAM to cache frequently accessed data from your hard drive or SSD, reducing the need for slower physical disk reads.

- Application Cache: Many applications use their own internal caches to store data or results that are likely to be needed again, speeding up operations within that application.

- Web Server Cache: Servers that host websites use caching to store frequently requested web pages or data, allowing them to serve content to many users quickly without having to generate it from scratch each time.

In essence, caching is a fundamental optimization technique: keep frequently accessed information closer and on faster storage to reduce latency and improve performance.
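The application-level flavor of this idea is easy to try yourself. As a minimal sketch, Python’s standard `functools.lru_cache` decorator remembers the results of recent calls; the `slow_square` function and its artificial delay are stand-ins invented for this example.

```python
from functools import lru_cache
import time


@lru_cache(maxsize=128)
def slow_square(n):
    """Stand-in for an expensive computation or a slow fetch."""
    time.sleep(0.05)  # simulate slow work
    return n * n


slow_square(12)  # first call does the slow work (a miss)
slow_square(12)  # second call is answered from the cache (a hit)

print(slow_square.cache_info())
# CacheInfo(hits=1, misses=1, maxsize=128, currsize=1)
```

The second call returns without sleeping at all, because the result is already sitting on the software equivalent of the quick access shelf.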

Frequently Asked Questions About Cache Memory

Is Cache Memory the same as RAM?

No, they are different, though they both serve as temporary storage. RAM (Random Access Memory) is much larger than cache and is the main working memory for your computer – where currently running programs and data reside. Cache is a smaller, much faster type of memory used by the CPU to store copies of data and instructions from RAM that it predicts it will need very soon. Think of RAM as the main library shelves and cache as the quick access shelf.

Does more cache mean a faster computer?

Generally, yes, having more cache (especially L2 and L3) can contribute to better performance, particularly in tasks that involve lots of data access. However, it’s not the only factor. CPU clock speed, the number of cores, RAM speed and size, and the speed of your storage (SSD vs. HDD) also play significant roles. A larger cache helps reduce cache misses, meaning the CPU spends less time waiting for data from slower memory.
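A small simulation can show why size helps, and also where it stops helping. The sketch below replays a made-up access pattern through a least-recently-used (LRU) cache of different capacities. LRU is just one common replacement policy chosen for illustration; real CPUs use their own hardware policies, and the `hit_rate` function and the access pattern are assumptions.

```python
from collections import OrderedDict


def hit_rate(accesses, capacity):
    """Replay accesses through an LRU cache of the given capacity."""
    cache = OrderedDict()
    hits = 0
    for address in accesses:
        if address in cache:
            hits += 1
            cache.move_to_end(address)  # mark as most recently used
        else:
            cache[address] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used entry
    return hits / len(accesses)


# A hypothetical program that loops over the same 8 addresses, 50 times.
accesses = list(range(8)) * 50

for capacity in (4, 8, 16):
    print(capacity, hit_rate(accesses, capacity))
# 4 0.0
# 8 0.98
# 16 0.98
```

In this toy workload, a cache too small for the data being looped over misses every time, one just big enough hits almost every time, and doubling it again changes nothing. More cache pays off only while the data a program is actively using does not already fit.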

Can I clear CPU cache?

No, you generally cannot manually ‘clear’ CPU hardware cache (L1, L2, L3) in the way you clear a browser cache. This is managed automatically by the CPU hardware and operating system to optimize performance. What you can often clear is software-level cache, like browser cache, application cache, or operating system temporary files, which helps free up storage space and can sometimes resolve issues caused by outdated cached data.

Why is cache memory so expensive?

Cache memory is typically built using SRAM (Static Random-Access Memory), which is much faster than the DRAM (Dynamic Random-Access Memory) used for main system RAM and, unlike DRAM, does not need to be constantly refreshed to hold its data. However, each SRAM bit typically requires six transistors, whereas a DRAM bit needs only one transistor and one capacitor. SRAM therefore takes up far more physical space on the chip per bit, making it significantly more expensive to produce than DRAM.
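For a rough sense of scale, the back-of-the-envelope calculation below counts the transistors needed just for the storage cells. It assumes the classic six-transistor SRAM cell and a one-transistor, one-capacitor DRAM cell; the 32 KiB size is a hypothetical figure picked for illustration, and control logic is ignored.

```python
SRAM_TRANSISTORS_PER_BIT = 6  # assumption: classic 6T SRAM cell
DRAM_TRANSISTORS_PER_BIT = 1  # assumption: 1T1C DRAM cell (plus one capacitor)


def transistors_needed(size_kib, transistors_per_bit):
    """Transistors for the storage cells alone, ignoring control logic."""
    bits = size_kib * 1024 * 8
    return bits * transistors_per_bit


size_kib = 32  # hypothetical cache size chosen for illustration

print(transistors_needed(size_kib, SRAM_TRANSISTORS_PER_BIT))  # 1572864
print(transistors_needed(size_kib, DRAM_TRANSISTORS_PER_BIT))  # 262144
```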

The Silent Accelerator of Your Digital Life

While you might not consciously interact with it, cache memory is constantly working behind the scenes, making your digital world feel responsive and fluid. From the moment you power on your device to the instant an application launches, cache memory plays a vital role in delivering the speed and performance we’ve come to expect.

It’s a prime example of how clever engineering tackles fundamental bottlenecks to create a better user experience. So, the next time your device feels incredibly fast, take a moment to appreciate the invisible quick access shelf that’s making it all possible!