Imagine interacting with the world around you, not by physically commanding your limbs, but simply by thinking the action. Sounds like something straight out of a sci-fi flick, right? Well, hold onto your neural networks, because that futuristic vision is rapidly becoming today’s reality thanks to the incredible advancements in mind-controlled prosthetics.

This field sits at the absolute forefront of neurotechnology, bridging the gap between human intention and machine execution. It’s about restoring a fundamental connection for individuals who have lost a limb, offering a level of intuitive control that was once confined to the realm of pure imagination.

How Does Thought Transform Into Movement? The Science Unpacked

The magic behind mind-controlled prosthetics isn’t magic at all – it’s sophisticated science and engineering working in concert. The core principle relies on something called a Brain-Computer Interface (BCI).

Think of the BCI as a translator. Your brain communicates through electrical signals generated by neurons. When you think about moving your hand, for example, specific patterns of neural activity occur in your motor cortex.

Here’s a breakdown of the key steps:

1. Signal Detection: Reading the Brain’s Language

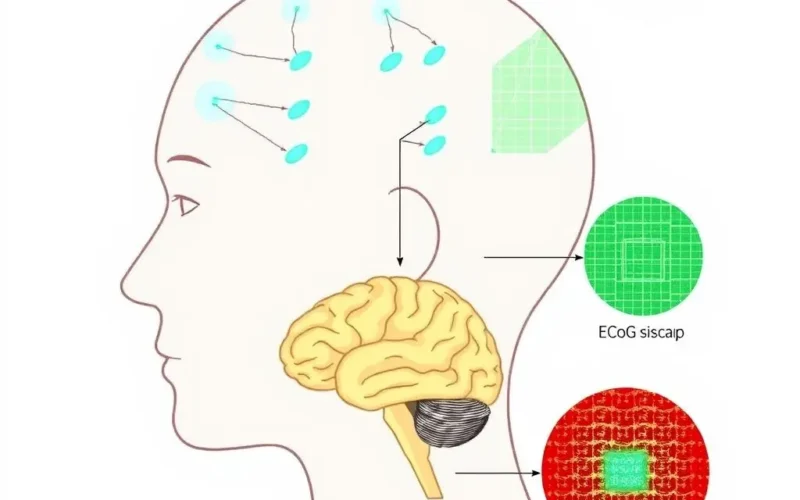

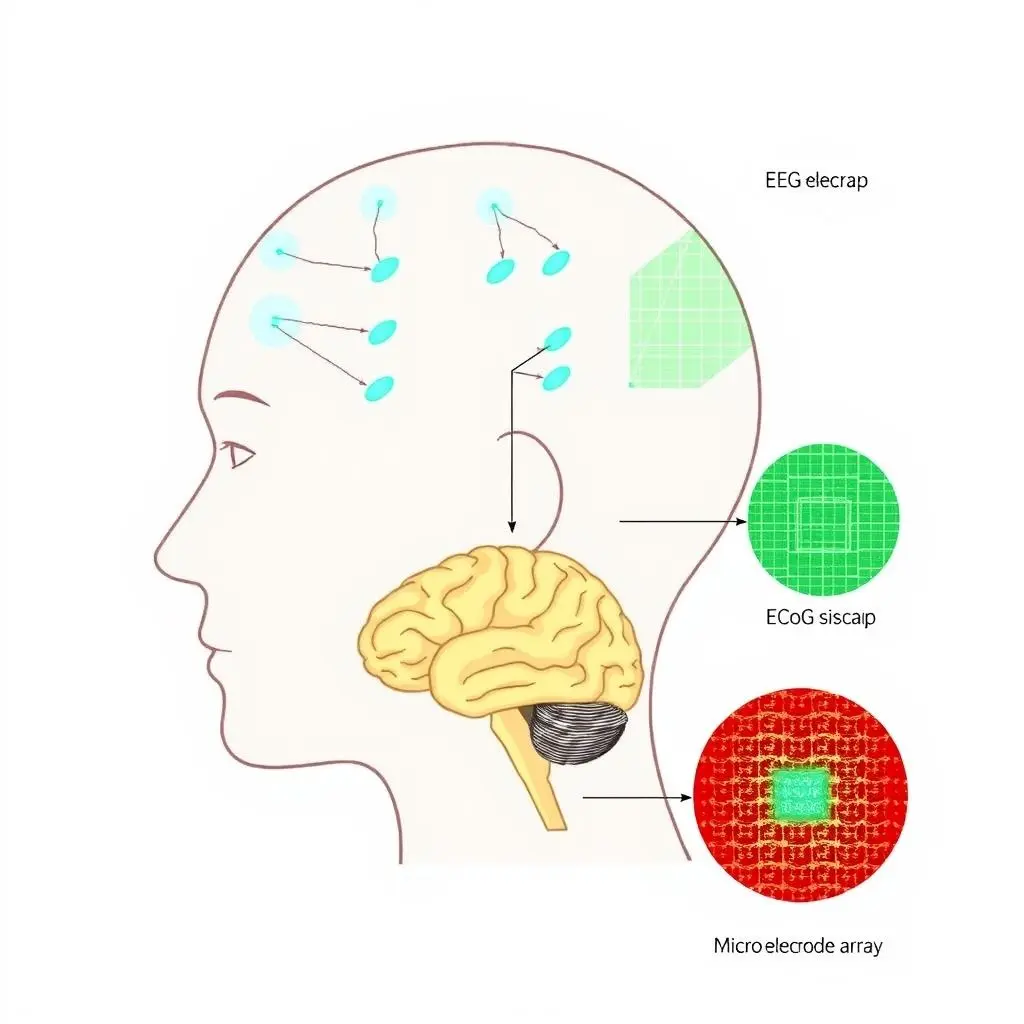

This is where sensors come in. There are different ways to capture brain signals, varying in invasiveness and signal quality:

- Non-Invasive Methods (e.g., EEG – Electroencephalography): Electrodes are placed on the scalp to detect electrical activity. While relatively easy to use, the skull and scalp weaken and blur the signals before they reach the electrodes, making them less precise.

- Partially Invasive Methods (e.g., ECoG – Electrocorticography): Electrodes are placed directly on the surface of the brain (under the skull). This provides clearer signals than EEG but requires surgery.

- Invasive Methods (e.g., microelectrode arrays such as the Utah array): Tiny electrodes are implanted directly into the brain tissue. These offer the highest-resolution signals, allowing for the detection of activity from individual neurons, but are the most surgically complex.

For intuitive prosthetic control, especially for complex movements, more invasive methods like ECoG or microelectrode arrays often yield the best results, providing the rich data needed to decode subtle intentions.

2. Signal Processing and Decoding: Translating Thoughts

Once the signals are captured, they are still raw electrical recordings that mean nothing to a computer on their own. This is where complex algorithms and machine learning step in. The BCI system processes these signals, filtering out noise and identifying the patterns associated with specific intended movements.
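To make the "filtering out noise" idea a little more concrete, here is a minimal Python sketch. It is an illustration only, not how any particular BCI works: it assumes a single channel of samples stored in a plain list, and the helper names `moving_average` and `window_power` are made up for this example. Real systems use far more sophisticated filters and features.

```python
def moving_average(samples, width):
    """Smooth a signal by averaging each sample with up to width - 1 earlier ones."""
    smoothed = []
    for i in range(len(samples)):
        window = samples[max(0, i - width + 1): i + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed


def window_power(samples):
    """Mean squared amplitude of a window after removing its average level."""
    mean = sum(samples) / len(samples)
    return sum((s - mean) ** 2 for s in samples) / len(samples)


# Assumed toy input: rapid jitter riding on top of a constant level of 5.0.
noisy = [6.0, 4.0, 6.0, 4.0, 6.0, 4.0]
clean = moving_average(noisy, width=2)

# After the first sample, the jitter averages out and only the 5.0 level remains.
print(clean)
print(window_power(noisy), window_power(clean[1:]))
```

The smoothed signal and its power are the kind of simple "features" a decoder could then look at, rather than the raw samples themselves.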

This decoding process is akin to learning a new language. The system learns to correlate specific neural patterns with actions like ‘close hand’, ‘rotate wrist’, or ‘extend finger’. This often involves calibration, where the user thinks about performing various movements while the system records the corresponding brain activity.
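Calibration and decoding can be sketched in a few lines of Python. This is a deliberately simple stand-in (a nearest-centroid classifier), not the algorithm used by any specific prosthetic. It assumes each calibration trial has already been reduced to a short list of numbers (a "feature vector"), and the function names `calibrate` and `decode` and the sample values are hypothetical.

```python
import math


def calibrate(trials):
    """Average the feature vectors recorded for each imagined movement.

    `trials` maps a movement label to a list of equal-length feature vectors.
    """
    centroids = {}
    for label, vectors in trials.items():
        count = len(vectors)
        centroids[label] = [sum(column) / count for column in zip(*vectors)]
    return centroids


def decode(centroids, features):
    """Return the movement whose calibration average is closest to `features`."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Assumed calibration data: two trials per movement, two features per trial.
trials = {
    "close hand": [[1.0, 0.0], [0.8, 0.2]],
    "rotate wrist": [[0.0, 1.0], [0.2, 0.8]],
}
centroids = calibrate(trials)

# A new, unseen pattern is assigned to the nearest learned movement.
print(decode(centroids, [0.7, 0.3]))
```

The calibration session builds one "typical pattern" per movement, and decoding is just asking which typical pattern a new reading resembles most. Real decoders replace this with richer machine learning models, but the calibrate-then-decode shape is the same.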

3. Command Translation: Sending Instructions to the Prosthetic

The decoded intention is then translated into commands the prosthetic limb can understand. These commands control motors and actuators within the prosthetic, instructing it precisely how and where to move.

The goal is real-time translation – for the prosthetic to move almost instantaneously with the user’s thought, making the control feel as natural and seamless as moving a biological limb.
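As a rough illustration of this last step, the Python sketch below turns a decoded intention into joint movements, one small step per control tick. Everything here is assumed for the example: the joint names, the target angles in `TARGETS`, the 15-degree step limit, and the function names. A real prosthetic controller would talk to actual motor drivers and handle timing, safety limits, and much more.

```python
# Hypothetical target joint angles (in degrees) for each decoded intention.
TARGETS = {
    "close hand": {"grip": 90.0},
    "open hand": {"grip": 0.0},
    "rotate wrist": {"wrist": 45.0},
}


def step_towards(current, target, max_step):
    """Move `current` towards `target` by at most `max_step`."""
    delta = target - current
    if abs(delta) <= max_step:
        return target
    return current + max_step if delta > 0 else current - max_step


def apply_command(joints, intention, max_step=15.0):
    """Return the joint angles after one control tick for a decoded intention."""
    updated = dict(joints)
    for joint, target in TARGETS.get(intention, {}).items():
        updated[joint] = step_towards(joints[joint], target, max_step)
    return updated


# Assumed starting pose: hand open, wrist neutral.
joints = {"grip": 0.0, "wrist": 0.0}
for _ in range(6):
    joints = apply_command(joints, "close hand")
print(joints)
```

Limiting how far a joint can move per tick is one simple way to keep motion smooth even if the decoder's output flickers. An intention that is not in `TARGETS` leaves the pose unchanged.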

Beyond the Hand: Types and Applications

While upper-limb prosthetics (hands and arms) often capture the most attention due to the complexity of fine motor movements, BCI technology is also being explored for lower-limb prosthetics and even other assistive devices.

Controlling a prosthetic leg intuitively to navigate uneven terrain or adjust gait requires decoding different sets of neural signals, often related to balance, weight distribution, and locomotion intention. This area presents unique challenges but holds immense promise for restoring mobility.

Key Components Working Together

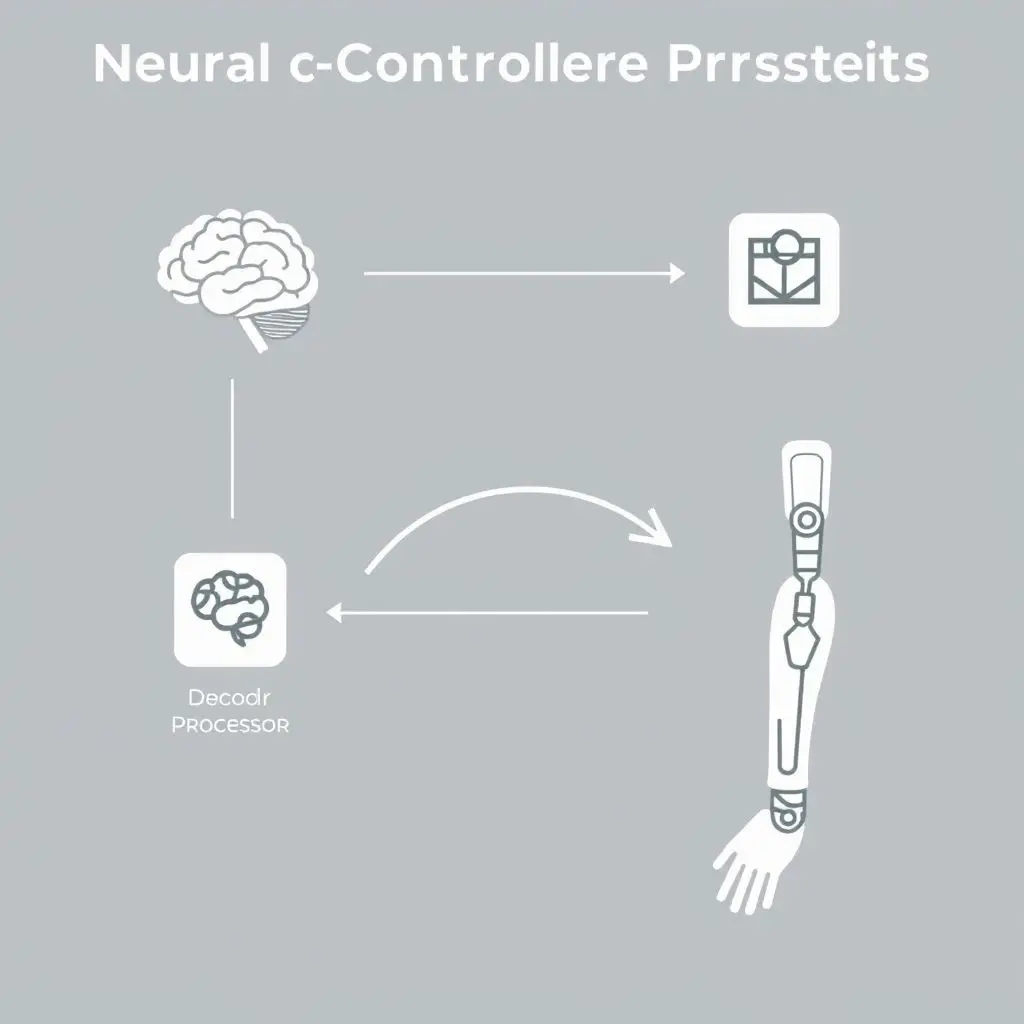

A functional mind-controlled prosthetic system involves several critical pieces:

- The BCI/Sensor Array: The interface that reads brain signals (as discussed above).

- The Decoder/Processor: The hardware and software that process and interpret the brain signals.

- The Prosthetic Limb: The robotic arm, hand, or leg itself, equipped with motors, sensors (to provide feedback), and a power source.

- Feedback Mechanisms: Crucially, for intuitive control, the user needs feedback. This can be visual (watching the prosthetic move), but researchers are also developing ways to provide haptic or sensory feedback, potentially stimulating nerves or the brain to give the user a sense of touch or proprioception (knowing where the limb is in space).
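To give a feel for how the feedback piece might connect to the rest, here is a small Python sketch that maps a fingertip pressure reading to a discrete feedback intensity. The pressure range, the number of levels, and the function name `feedback_level` are all assumptions made for illustration. How real systems deliver sensory feedback is an active research question and is far more involved than this.

```python
def feedback_level(pressure, max_pressure=10.0, levels=5):
    """Map a pressure reading to a feedback intensity from 0 to `levels`.

    Readings outside the range 0..max_pressure are clamped to that range.
    """
    clamped = max(0.0, min(pressure, max_pressure))
    return int(clamped * levels / max_pressure)


# Assumed sensor readings as the hand closes around an object.
for reading in [0.0, 4.0, 7.0, 12.0]:
    print(reading, "->", feedback_level(reading))
```

The point of the sketch is the loop it implies: sensors in the limb measure contact, and that measurement is scaled into a signal the user can feel, closing the circle between intention and sensation.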

The Life-Altering Impact

The implications of this technology for individuals with limb loss are profound. It’s not just about aesthetics; it’s about:

- Restoring Independence: Enabling tasks that were previously difficult or impossible, from eating and dressing to working and engaging in hobbies.

- Enhanced Dexterity and Control: Moving beyond simple open/close functions to manipulate objects with much greater precision and nuance.

- Improved Quality of Life: The psychological impact of regaining function and feeling more connected to one’s body can be immense.

- Greater Confidence: Feeling empowered and capable in daily interactions.

Navigating the Hurdles: Challenges on the Path

Despite the incredible progress, mind-controlled prosthetics face significant challenges:

- Signal Variability and Noise: Brain signals can be complex and affected by various factors, making consistent, reliable decoding difficult.

- Decoding Complexity: Understanding and accurately predicting the user’s intention from neural signals is an ongoing area of research, especially for complex, multi-joint movements.

- Surgical Risks (for invasive BCIs): Implanting electrodes carries inherent surgical risks, and the long-term stability and biocompatibility of implants are still being studied.

- Cost and Accessibility: These advanced systems are currently very expensive, limiting access for many who could benefit.

- Training and Adaptation: Users often require extensive training to learn how to effectively control the prosthetic using their thoughts.

- Lack of Sensory Feedback: The absence of natural touch and proprioception is a major limitation that researchers are actively trying to address.

Frequently Asked Questions About Mind-Controlled Prosthetics

Are mind-controlled prosthetics available to everyone now?

While the technology exists and is being used in clinical trials and for some individuals, it is not yet widely available commercially for the average person due to cost, complexity, and the need for specialized medical procedures and training.

Is it painful to use a mind-controlled prosthetic?

The prosthetic limb itself is not painful. If an invasive BCI is used, there is a surgical procedure involved, and potential discomfort during the recovery period. The BCI itself typically doesn’t cause pain during operation.

How long does it take to learn how to use one?

Learning times vary greatly depending on the individual, the type of BCI, and the complexity of the prosthetic. It often requires dedicated training sessions over weeks or months.

What kind of movements can these prosthetics perform?

Early systems offered basic grip functions. Modern systems can perform more complex movements, including individual finger control, wrist rotation, and sophisticated grasping patterns. The level of dexterity depends heavily on the BCI technology and decoding algorithms used.

Can they provide a sense of touch?

This is a major area of research. Some advanced systems are experimenting with sensory feedback by stimulating nerves or areas of the brain to give the user a rudimentary sense of touch or pressure from the prosthetic.

Gazing Beyond the Horizon

The journey of mind-controlled prosthetics is far from over; it’s just beginning. Researchers are pushing boundaries daily, aiming for more intuitive control, better sensory feedback, smaller and more powerful systems, and increased affordability.

We might see systems that are easier to implant (or even entirely non-invasive with comparable performance), prosthetics that can learn and adapt to the user’s intentions over time, and potentially even BCIs integrated with biological nervous systems at a deeper level. This technology isn’t just about replacing a limb; it’s about re-establishing a connection, offering a glimpse into a future where the line between human and machine continues to evolve in ways that enhance and restore human capability.