Ever scrolled through your social feed and thought, “Is this all there is?” Or felt that everyone you see agrees with you? If so, you might be inside something less transparent than it appears: a digital filter bubble.

It’s easy to think that hopping online gives you a window to the world. But increasingly, that window is curated just for you by complex algorithms. These digital gatekeepers watch what you click, what you like, what you search for, and even who your friends are. Their primary goal? Keep you engaged. And the best way to do that, they figure, is to show you more of what you (or people like you) seem to prefer.

This personalized stream is your filter bubble in action. It may seem harmless, even convenient, but it can be the first step toward a more concerning phenomenon: the echo chamber, where dissenting voices are quietly filtered out and your existing opinions and beliefs are reflected back to you, amplified by others who share them. The result is a feedback loop that can narrow your perspective without your even realizing it.

How exactly are your online experiences being shaped? If the questions above made you pause and look at your own digital world a little differently, read on. Let’s dive deeper into what these concepts really mean, how they work, and, most importantly, what you can do about them.


What Exactly is a Filter Bubble?

The term “filter bubble” was popularized by internet activist Eli Pariser in his 2011 book *The Filter Bubble* and a TED Talk he gave the same year. He described it as a state of intellectual isolation that can result when a website’s algorithm selectively guesses what information a user would like to see, based on data about that user such as location, past click behavior, and search history.

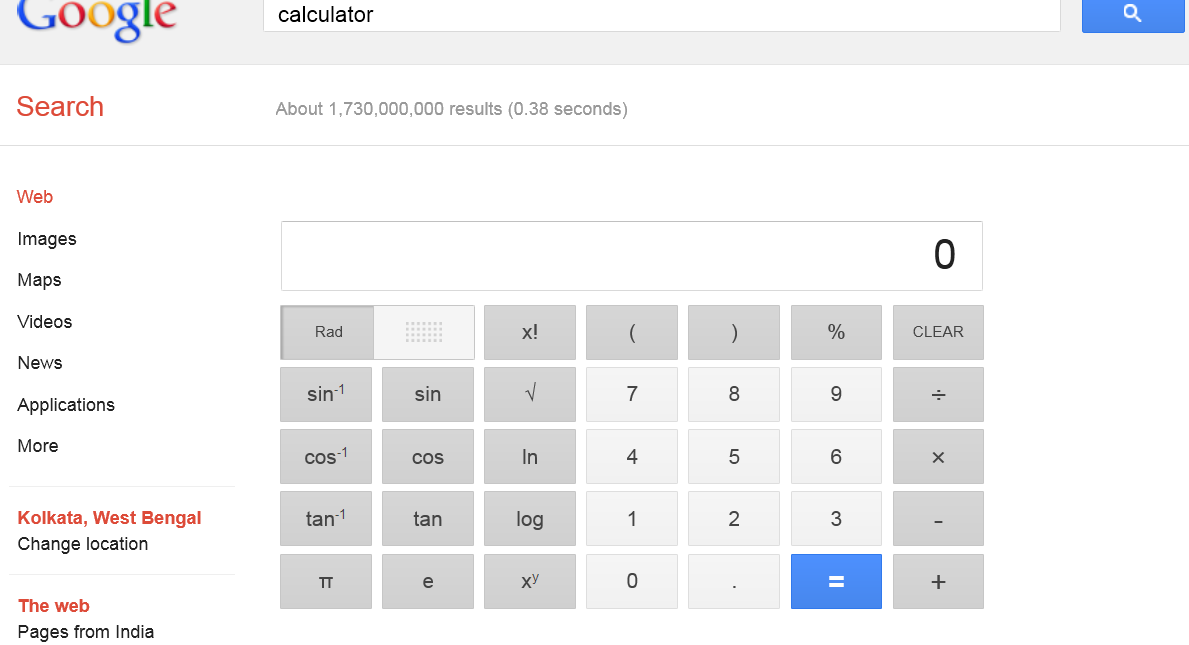

Think of it as your own unique universe of information online. Google tailors your search results. Facebook decides which friends’ posts appear at the top of your feed and which news articles you’re shown. Netflix recommends movies based on your viewing history. E-commerce sites show you products similar to what you’ve browsed before.

These systems aren’t inherently malicious. They are designed to make your online experience more efficient and engaging for *you*. For businesses, personalization means you spend more time on their platform and are more likely to click on relevant ads or products. But the side effect is that you are exposed less and less to information, perspectives, or content that falls outside of your perceived interests or preferences.
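The feedback loop described above can be made concrete with a toy simulation. This is a hypothetical sketch, not how any real platform ranks content: it assumes a feed that gives each topic space in proportion to its past engagement raised to a power (`sharpness`), and a user whose chance of clicking on each topic never changes. The function names, parameters, and numbers are illustrative only. Feed diversity is measured with Shannon entropy in bits: 2 bits means four topics shown equally, 0 means a single topic.

```python
import math

def exposure_shares(scores, sharpness):
    """Split the feed among topics in proportion to score ** sharpness.

    A sharpness above 1 gives disproportionately more space to topics
    that already have high engagement (a hypothetical ranking rule).
    """
    weights = [s ** sharpness for s in scores]
    total = sum(weights)
    return [w / total for w in weights]

def entropy_bits(shares):
    """Shannon entropy of the feed mix: higher means more diverse."""
    return -sum(p * math.log2(p) for p in shares if p > 0)

def simulate(click_rates, rounds=10, sharpness=2.0, feed_size=100):
    """Return the feed-diversity entropy observed in each round."""
    scores = [1.0] * len(click_rates)  # no history yet: all topics tied
    history = []
    for _ in range(rounds):
        shares = exposure_shares(scores, sharpness)
        history.append(entropy_bits(shares))
        for i, (share, rate) in enumerate(zip(shares, click_rates)):
            # Expected clicks = items shown for this topic * chance of a click;
            # the hypothetical platform counts them as new engagement signal.
            scores[i] += share * feed_size * rate
    return history

# Four topics the user likes only slightly differently.
history = simulate([0.6, 0.5, 0.4, 0.3])
print(f"first feed: {history[0]:.2f} bits, last feed: {history[-1]:.2f} bits")
```

The user’s tastes stay fixed throughout, yet the feed drifts toward the topic with a slight early lead, because exposure produces clicks and clicks produce more exposure. Rerunning it with a different `sharpness` shows how much the ranking rule itself, rather than the user, drives the narrowing.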

From Bubble to Chamber: The Echo Effect

If a filter bubble is about the information you *are* shown (or, more importantly, *not* shown) based on algorithms, an echo chamber is the social and psychological space that often develops as a result, particularly on social media platforms.

Within an echo chamber, beliefs are amplified or reinforced by communication and repetition inside a closed system. You follow people who share your views, the algorithms show you more content from those people (and similar others), and you interact primarily with those who agree with you. Dissenting opinions, if they appear at all, are often dismissed, mocked, or simply drowned out.

It’s like being in a room where everyone is saying the same thing louder and louder. Instead of engaging with diverse ideas, you hear echoes of your own thoughts and those of your chosen group reflected back at you. This is often compounded by human psychology, specifically confirmation bias – our natural tendency to favor information that confirms our existing beliefs and dismiss information that contradicts them.
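The closed-system dynamic described above can be illustrated with a classic toy model from opinion-dynamics research, the Hegselmann-Krause bounded-confidence model. This is an illustrative choice on our part, not a model the concepts above are defined by: each person holds an opinion between 0 and 1 and, at every step, moves to the average opinion of everyone within `confidence` of their own view, ignoring everyone else. The function names and numbers are hypothetical.

```python
def step(opinions, confidence):
    """One round: each person adopts the mean opinion of everyone
    (themselves included) whose view is within `confidence` of theirs."""
    updated = []
    for x in opinions:
        peers = [y for y in opinions if abs(y - x) <= confidence]
        updated.append(sum(peers) / len(peers))
    return updated

def run(opinions, confidence, steps=20):
    """Apply `step` repeatedly and return the final opinions."""
    for _ in range(steps):
        opinions = step(opinions, confidence)
    return opinions

def count_groups(opinions, tol=1e-9):
    """Count distinct final opinions (people closer than tol share a group)."""
    groups = []
    for x in sorted(opinions):
        if not groups or x - groups[-1] > tol:
            groups.append(x)
    return len(groups)

# Two camps that start far apart.
start = [0.0, 0.05, 0.1, 0.9, 0.95, 1.0]

# Listening only to like-minded people: each camp agrees internally,
# but the two camps never move toward each other.
closed = run(start, confidence=0.2)
# Listening to everyone: the whole group settles on a shared position.
open_minded = run(start, confidence=1.0)
print(count_groups(closed), count_groups(open_minded))  # prints: 2 1
```

Within each camp, views become uniform and mutually reinforcing; across camps, nothing moves. The model leaves out algorithms entirely and does not capture views becoming more extreme, so it shows only how the social side of an echo chamber can keep groups apart.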

Why Should You Care? The Dangers of Living in a Digital Bubble

Personalized feeds are convenient, but living perpetually inside a filter bubble that hardens into an echo chamber has significant consequences, both for individuals and for society:

Narrowed Perspectives and Lack of Empathy

When you’re only exposed to information and viewpoints that align with your own, it becomes difficult to understand where others are coming from. This can erode empathy and make constructive dialogue across differences much harder. You might genuinely believe your perspective is the *only* rational one because it’s the only one you ever encounter.

Increased Polarization and Division

Echo chambers fuel division. As groups become more isolated and their beliefs are constantly reinforced without challenge, their views can become more extreme. This is particularly visible in political discourse, where opposing sides inhabit entirely different online realities, seeing different “facts” and hearing different narratives, leading to entrenched positions and animosity.

Vulnerability to Misinformation and Disinformation

Inside an echo chamber, false information or propaganda that aligns with the group’s existing beliefs can spread rapidly and unchecked. Because alternative viewpoints or fact-checks are often filtered out or distrusted, members are less likely to encounter corrections or critical analyses, making them highly susceptible to believing and spreading falsehoods.

Stunted Critical Thinking

If you’re never challenged or exposed to counterarguments, your ability to critically evaluate information can weaken. Developing critical thinking skills requires grappling with complex ideas and conflicting evidence, exactly the kind of friction that filter bubbles and echo chambers tend to remove.

Stepping Outside the Walls: How to Navigate a Curated Online World

Recognizing that you might be in a bubble is the crucial first step. Escaping it requires conscious effort and a willingness to seek out diverse perspectives. Here are some strategies:

- Diversify Your News Sources: Don’t rely on just one or two websites or social media feeds for information. Seek out reputable news organizations with different editorial stances (while being aware of their potential biases too). Read local, national, and international news.

- Actively Seek Out Different Viewpoints: On social media, intentionally follow people, groups, or pages that hold views different from your own. Engage with their content respectfully to understand their perspective, even if you disagree. Be prepared for challenging conversations and practice civil discourse.

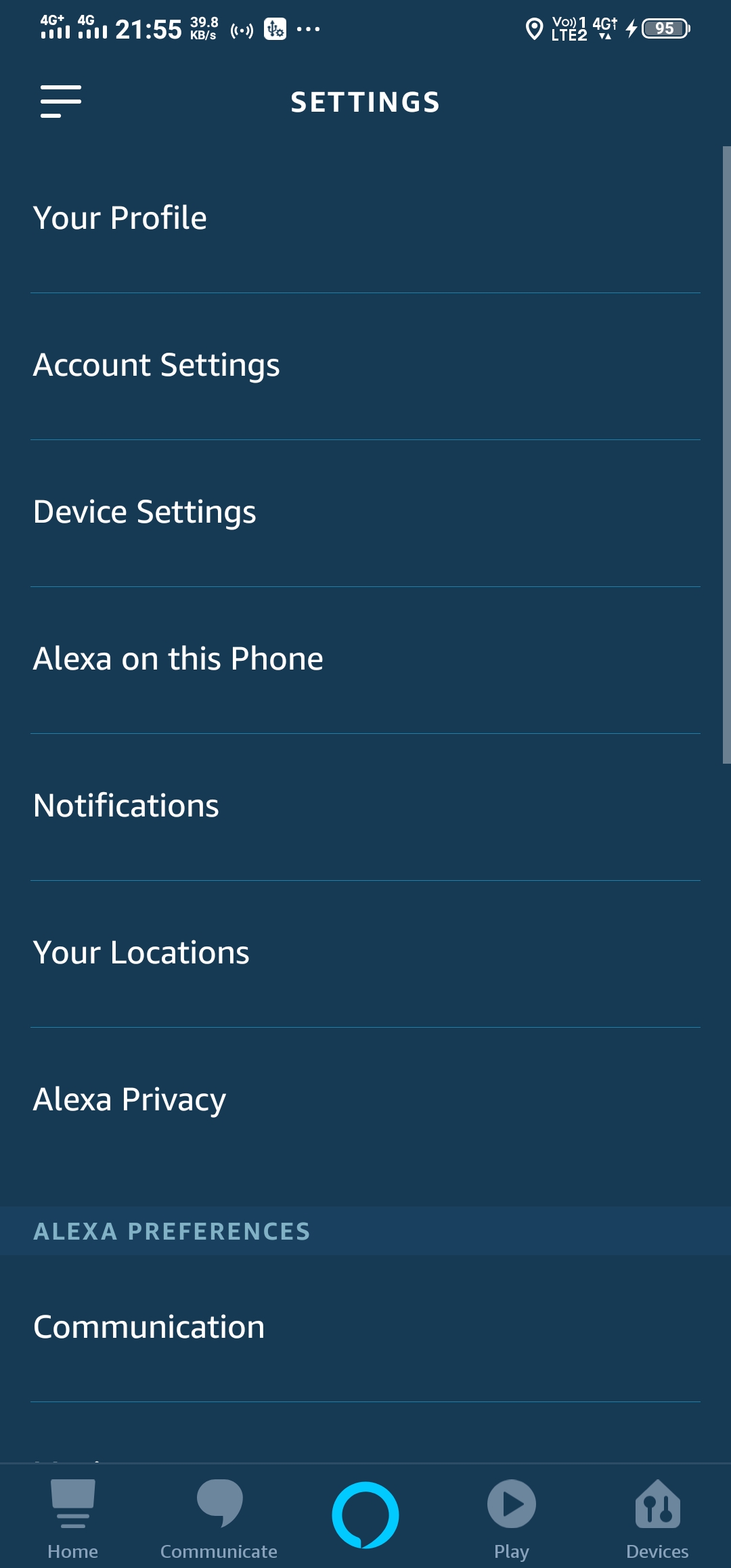

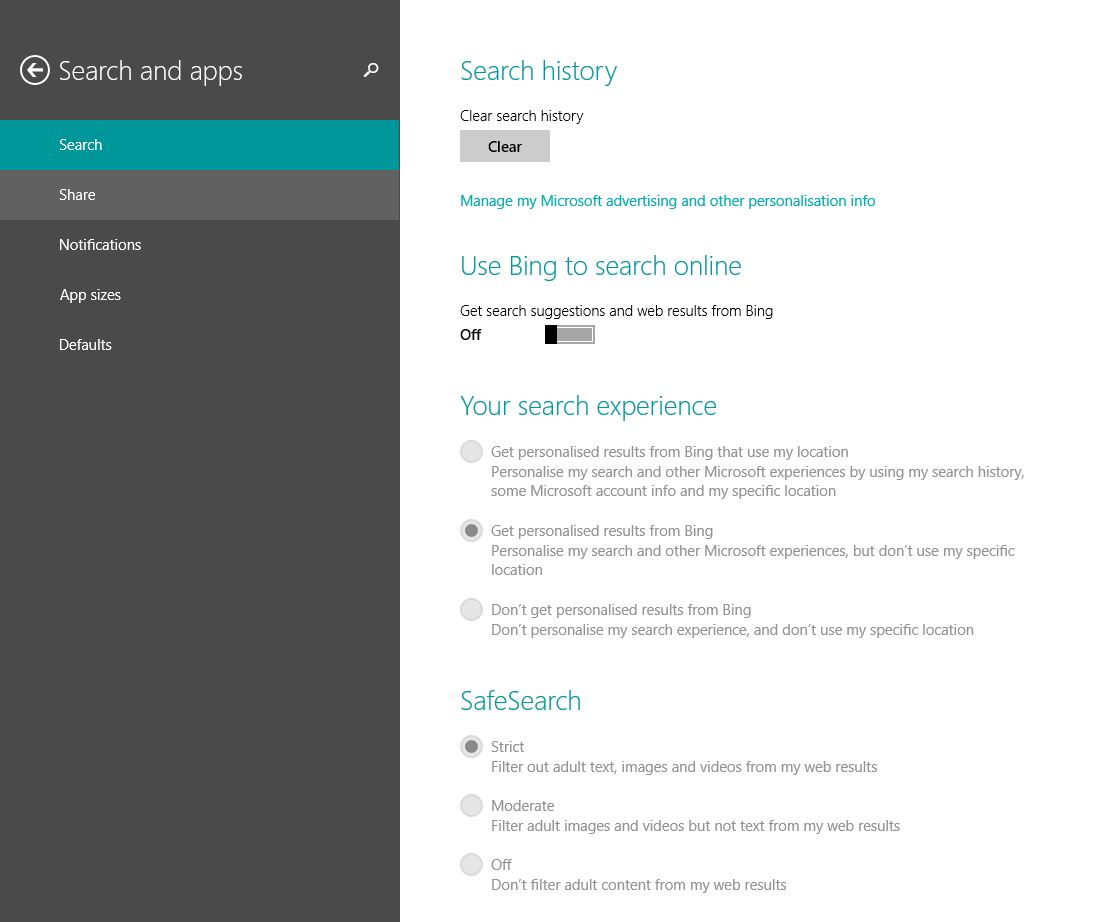

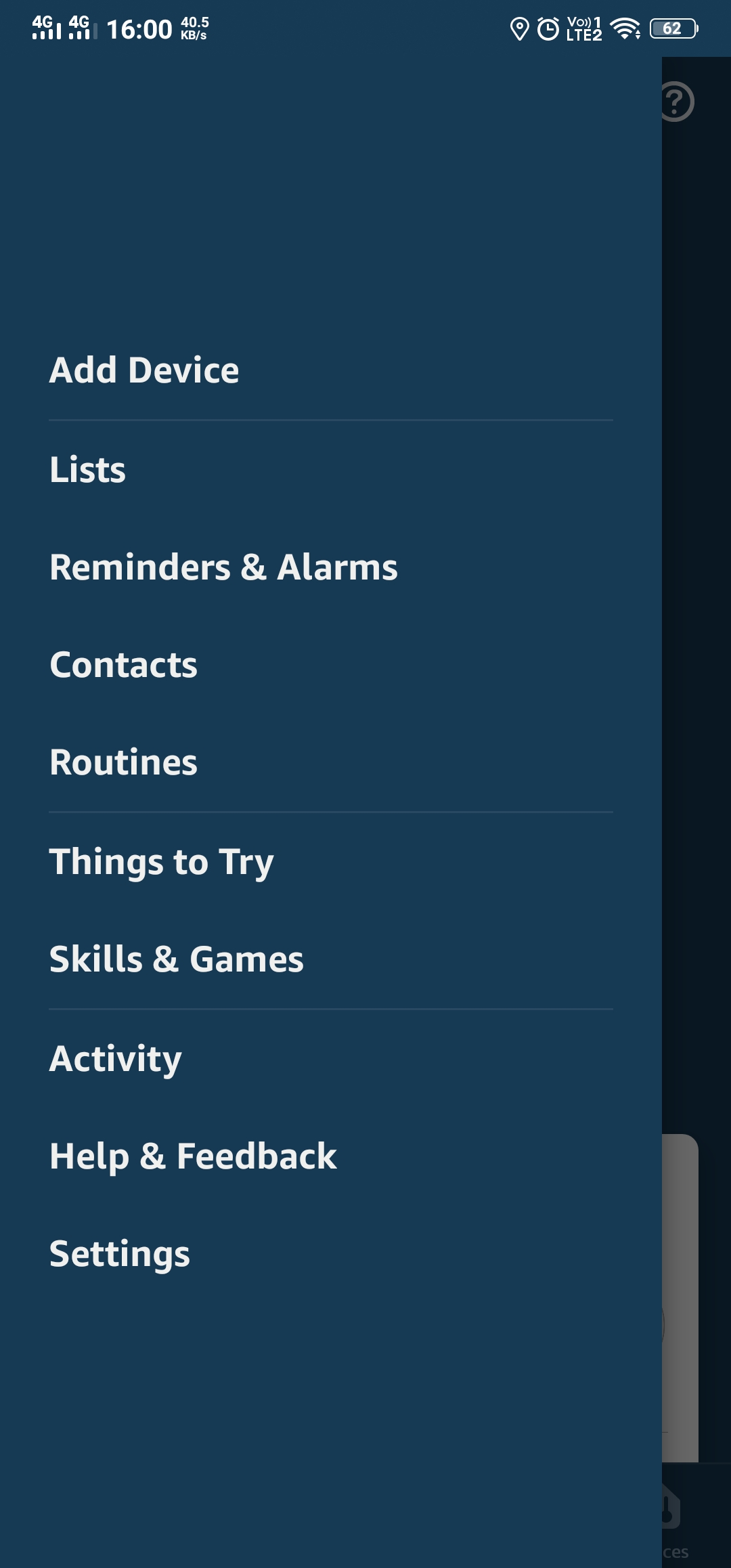

- Use Search Engines Mindfully: Be aware that your search results are personalized. Try privacy-focused search engines or incognito/private browsing modes to see less tailored results, though signals such as your location can still shape what appears. Consider deliberately searching for opposing arguments or information on topics you feel strongly about.

- Question Everything (Even What You Agree With): Cultivate a habit of critical thinking. Ask: Who created this content? What is their motive? What evidence supports this claim? Are there other perspectives or information I’m missing?

- Talk to People Offline: Engage in conversations with friends, family, and colleagues who have different backgrounds and beliefs. Real-world interactions can expose you to perspectives and nuances that online environments often flatten.

- Explore Platforms Beyond Your Usual Haunts: Different online platforms attract different user bases and content styles. Venturing into spaces you don’t normally frequent can expose you to new ideas (exercise caution and prioritize safe, reputable platforms).

Frequently Asked Questions About Bubbles and Chambers

Q: Are filter bubbles and echo chambers the same thing?

A: Not exactly, but they are closely related and one often leads to the other. A filter bubble is about personalized *content filtering* by algorithms. An echo chamber is the *resulting social phenomenon* where similar beliefs are amplified and dissenting views are excluded, often within online communities.

Q: Are algorithms solely responsible for this?

A: Algorithms play a huge role by controlling what information you see. However, user behavior is also critical. We tend to click on things we agree with, follow people like ourselves, and unfollow or block those we disagree with, reinforcing the algorithmic filtering and actively building our own echo chambers.

Q: Is all personalization bad?

A: Not necessarily. Personalization can be helpful for discovering relevant products or content you genuinely enjoy. The issue arises when it severely limits your exposure to diverse information and viewpoints, creating an isolated information environment.

Q: How can I tell if I’m in an echo chamber?

A: Ask yourself: Do I rarely encounter opinions different from my own online? When I do, are they presented in a dismissive or negative light? Do I feel increasingly confident that my viewpoint is the only correct one based on what I see online? Do the same pieces of information or arguments keep reappearing in my feed? If you answer yes to most of these, you may be experiencing an echo chamber effect.

Q: What role do platforms have in this?

A: Platforms design and deploy the algorithms that create filter bubbles. Even though their primary goal is engagement, they have a responsibility to consider the societal impact of these systems and, where possible, to design them in ways that encourage exposure to diverse viewpoints. Striking the right balance between personalization and breadth, however, is a genuinely complex challenge.

Thinking Beyond the Algorithmic Walls

Navigating the online world in the age of advanced algorithms and personalized feeds requires active participation: if you want the full picture, it can no longer be a passive experience. Being aware of filter bubbles and echo chambers is the first step; deliberately seeking out diverse information and engaging critically with what you find is how you make sure you’re not just hearing echoes of yourself and your chosen group.

The health of public discourse and your own informed perspective depend on your willingness to step outside the comfortable, algorithmically defined walls and explore the wider, more complex landscape of information and ideas that exists online.