Ever unlock your phone with just a glance and marvel, even for a split second, at the sheer wizardry? Or perhaps you’ve seen social media platforms magically suggest tags for friends in your photos, almost as if they have a sixth sense. This isn’t arcane magic, though it certainly feels like it sometimes. It’s the power of facial recognition technology, and today, we’re pulling back the curtain to reveal the ‘secret sauce’ – the intricate steps that allow a machine to know you.

First Things First: What Exactly is Facial Recognition?

At its core, facial recognition is a type of biometric technology. Just like fingerprints or iris scans, it uses unique biological characteristics to identify or verify a person. Instead of ridges on a fingertip, it focuses on the unique geometry of your face. From unlocking your high-tech gadgets to more complex security applications, its presence is becoming increasingly woven into the fabric of modern life.

The core idea might sound straightforward, but the journey from a simple image to a confirmed identity is a fascinating dance of algorithms and data.

The Inner Workings: A Step-by-Step Breakdown

So, how does this digital detective actually recognize a face? It’s generally a multi-stage process. Let’s break it down:

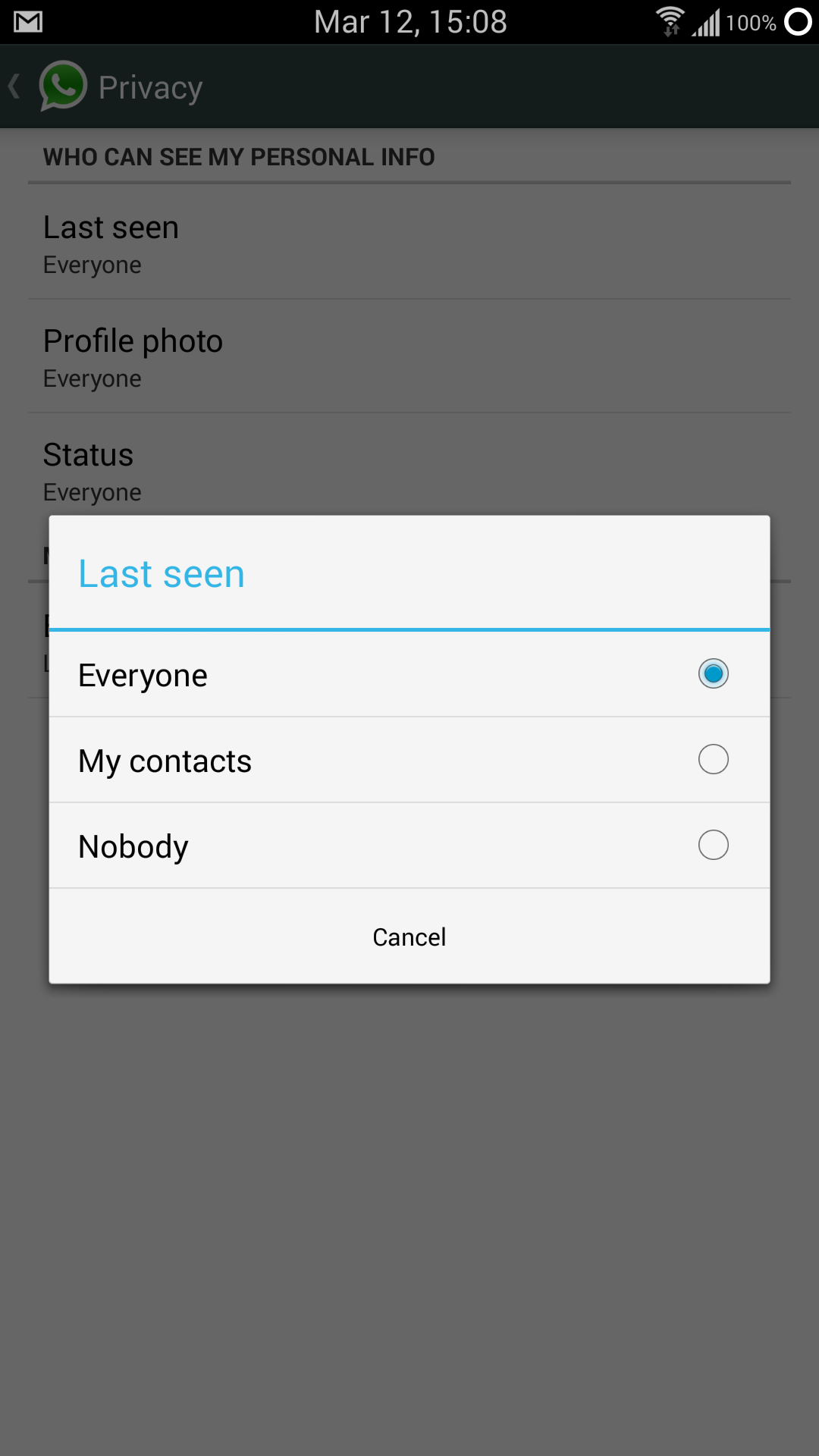

Step 1: Face Detection – “Ah, There’s a Face!”

Before any recognition can happen, the system needs to know if there’s a face in the picture or video feed, and if so, where it is. This is face detection. Algorithms scan the input, looking for patterns indicative of a human face – think two eyes, a nose, and a mouth in a common configuration. Early methods like the Viola-Jones algorithm used Haar-like features, while modern systems often employ sophisticated machine learning models, particularly Convolutional Neural Networks (CNNs), for higher accuracy even in challenging conditions like poor lighting, odd angles, or partial obstructions.

Once detected, the face region is isolated for the next stage.
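To make the Haar-like idea a little more concrete, here is a minimal sketch in plain Python. It is not the full Viola-Jones detector (which combines thousands of such features in a trained cascade); it only shows one two-rectangle feature computed quickly via an integral image. The function names and the toy 4x4 patch are made up for illustration.

```python
def integral_image(img):
    """Summed-area table with an extra zero row/column for easy lookups."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle, using only four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_feature(ii, top, left, height, width):
    """Lower half minus upper half: large when a dark band sits above a bright one."""
    half = height // 2
    upper = rect_sum(ii, top, left, half, width)
    lower = rect_sum(ii, top + half, left, half, width)
    return lower - upper


# Toy grayscale patch (hypothetical values): a dark "eye" band over a
# brighter "cheek" band.
patch = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
ii = integral_image(patch)
feature = two_rect_feature(ii, 0, 0, 4, 4)
print(feature)  # 1520: a strong dark-over-bright response
```

A detector in this style slides many such features over the image at different positions and scales; the integral image is what keeps each rectangle sum cheap no matter how large the rectangle is.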

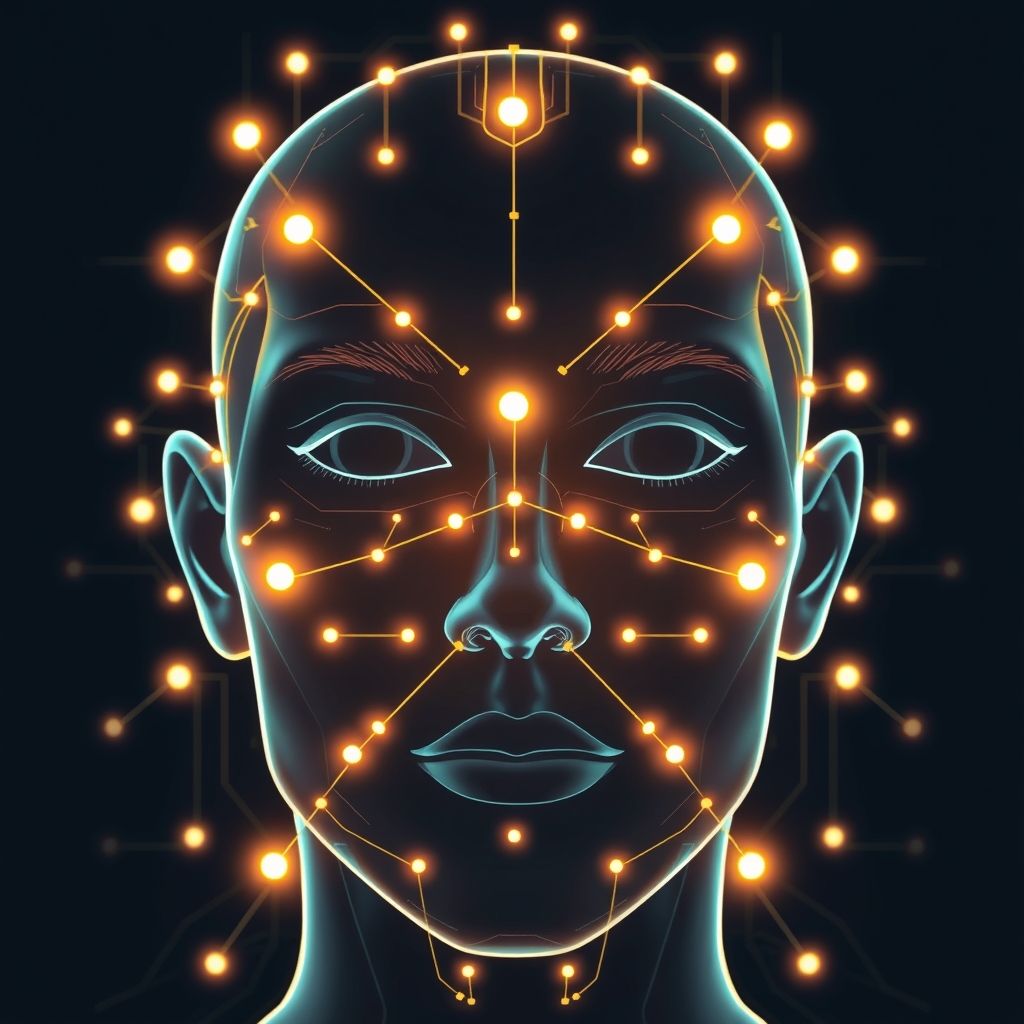

Step 2: Feature Analysis & Extraction – “Mapping Your Unique You”

With a face detected, the system gets to work analyzing its unique characteristics. This is where it identifies facial landmarks, also called nodal points, and derives measurements from them, such as:

- Distance between the eyes

- Width of the nose

- Shape of the cheekbones

- Length of the jawline

- Depth of the eye sockets

- Contour of the lips, ears, and chin

Traditional systems might use geometric approaches, measuring these distances and ratios. More advanced systems, especially those powered by deep learning, can extract a much richer set of features, often ones that aren’t easily interpretable by humans but are highly discriminative. The system essentially creates a detailed map of your facial structure. This can be done using 2D images or, for enhanced accuracy, 3D sensor data that captures the depth and contour of the face.
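Here is a rough sketch of the traditional geometric approach described above. The landmark coordinates are invented for illustration (a real system would get them from a landmark detector), and the two ratios are just examples of the kind of measurement such a system might take.

```python
import math

# Hypothetical landmark coordinates in pixels (x, y).
landmarks = {
    "left_eye": (30.0, 40.0),
    "right_eye": (70.0, 40.0),
    "nose_left": (42.0, 65.0),
    "nose_right": (58.0, 65.0),
    "chin": (50.0, 100.0),
}


def geometric_features(pts):
    """Ratios normalised by eye distance, so image scale does not matter."""
    eye_dist = math.dist(pts["left_eye"], pts["right_eye"])
    nose_width = math.dist(pts["nose_left"], pts["nose_right"])
    eye_mid = (
        (pts["left_eye"][0] + pts["right_eye"][0]) / 2,
        (pts["left_eye"][1] + pts["right_eye"][1]) / 2,
    )
    eyes_to_chin = math.dist(eye_mid, pts["chin"])
    return [nose_width / eye_dist, eyes_to_chin / eye_dist]


features = geometric_features(landmarks)

# The same face at twice the resolution should give the same ratios.
scaled = {name: (2 * x, 2 * y) for name, (x, y) in landmarks.items()}
scaled_features = geometric_features(scaled)
print(features, scaled_features)
```

Dividing by the eye distance is one simple way to make the measurements independent of how large the face appears in the image. Deep learning systems skip hand-picked measurements like these and learn their own features instead.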

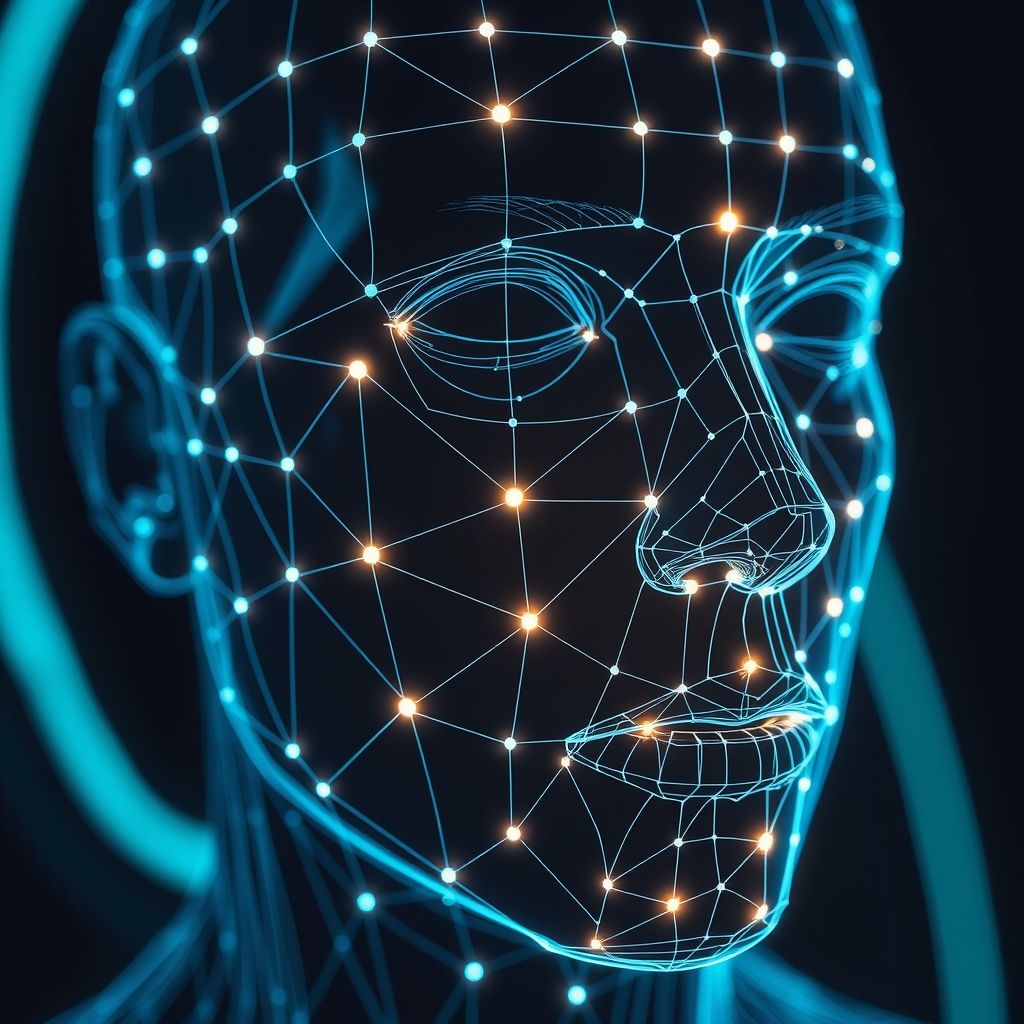

Step 3: Faceprint Creation – “Your Face as a Digital Code”

This intricate map of your facial features is then converted into a unique numerical representation. This digital signature is often called a faceprint, facial template, or biometric signature. Think of it as a vector: a fixed-length list of numbers that describes your face. It’s not an image of your face but rather a set of data points derived from it. Different algorithms, such as Principal Component Analysis (PCA), Linear Discriminant Analysis (LDA), or deep learning embeddings (like those from FaceNet or ArcFace), are used to generate this compact and distinctive code.

The key here is that this faceprint should ideally be consistent for the same person across different images and lighting conditions, yet significantly different for different individuals.
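A small sketch of what "consistent for the same person, different for different people" looks like in numbers. The four-dimensional vectors and the names below are made up; real embeddings are much longer (FaceNet, for instance, produces 128 numbers per face) and come from a trained model rather than being typed in by hand.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so that only its direction matters."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]


def euclidean(a, b):
    """Straight-line distance between two faceprints."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Made-up faceprints, for illustration only.
alice_photo_1 = l2_normalize([0.9, 0.1, 0.3, 0.2])
alice_photo_2 = l2_normalize([0.8, 0.2, 0.3, 0.2])  # same person, new lighting
bob_photo = l2_normalize([0.1, 0.9, 0.2, 0.6])

same_person = euclidean(alice_photo_1, alice_photo_2)
different_person = euclidean(alice_photo_1, bob_photo)
print(same_person < different_person)  # True
```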

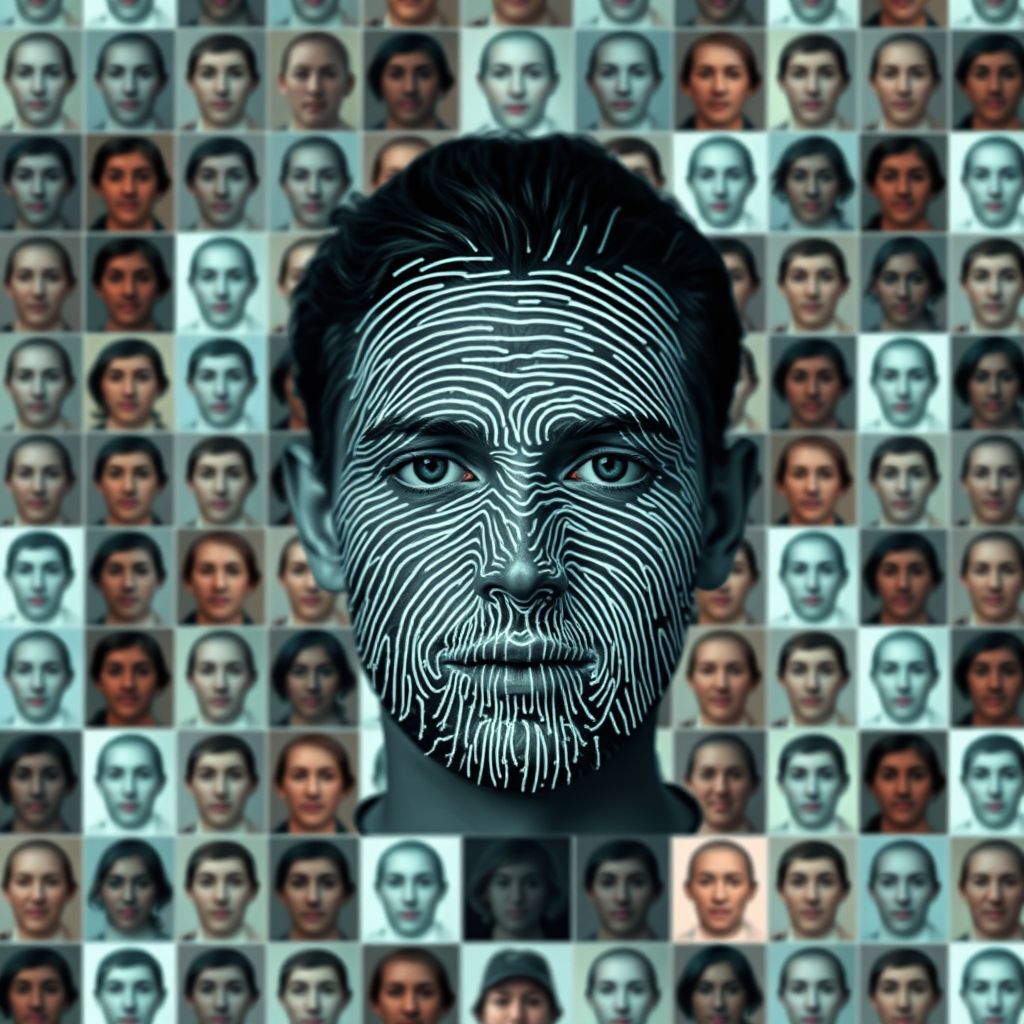

Step 4: Matching – “Is That Really You?”

The newly created faceprint is now ready to be compared. This stage can take two primary forms:

- Verification (1:1 Match): This is about confirming a person’s claimed identity. For example, when you unlock your phone with your face, your live faceprint is compared against your stored faceprint. The system asks, “Are you who you say you are?” If the comparison score exceeds a certain threshold, access is granted.

- Identification (1:N Match): Here, the system tries to find a match for the live faceprint within a database of many stored faceprints. This is used in scenarios like finding a person in a crowd or tagging friends in photos on social media. The system asks, “Who is this person?” It compares the live faceprint to all entries in the database and returns the closest match(es) if the similarity score is high enough.

The matching process involves calculating the ‘distance’ or ‘similarity’ between the new faceprint and those in the database. A smaller distance or higher similarity score indicates a likely match.
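The two matching modes can be sketched in a few lines. This is an illustration under assumptions, not how any particular product works: the faceprints are invented, cosine similarity is just one common choice of score, and the 0.95 threshold is arbitrary (real systems tune it on test data).

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


THRESHOLD = 0.95  # illustrative value only


def verify(live, enrolled, threshold=THRESHOLD):
    """1:1 check: does the live faceprint match the single enrolled one?"""
    return cosine_similarity(live, enrolled) >= threshold


def identify(live, database, threshold=THRESHOLD):
    """1:N search: best-matching name, or None if nothing is close enough."""
    best_name, best_score = None, -1.0
    for name, stored in database.items():
        score = cosine_similarity(live, stored)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


# Hypothetical enrolled faceprints.
database = {
    "alice": [0.9, 0.1, 0.3, 0.2],
    "bob": [0.1, 0.9, 0.2, 0.6],
}
live = [0.8, 0.2, 0.3, 0.2]
stranger = [0.1, 0.1, 0.9, 0.1]

print(verify(live, database["alice"]))  # True
print(identify(live, database))         # alice
print(identify(stranger, database))     # None
```

Note that `identify` can return `None`: a sensible 1:N system should be able to say "nobody I know" rather than always naming its nearest neighbour.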

The Engine Room: Algorithms Powering the Process

The magic of facial recognition isn’t just in the steps but in the sophisticated algorithms that drive each one. Historically, techniques like Eigenfaces (which used PCA to find variations in a collection of face images) and Fisherfaces (which used LDA to maximize separation between classes) were prominent.

However, the field has been revolutionized by Deep Learning, particularly Convolutional Neural Networks (CNNs). CNNs are inspired by the human visual cortex and are incredibly effective at automatically learning hierarchical features from vast amounts of image data. They can learn to detect faces, extract robust features, and perform matching with accuracies that were previously unattainable. Models like DeepFace (Facebook), FaceNet (Google), and others have set new benchmarks in performance. These models are trained on massive datasets containing millions of face images, allowing them to learn intricate patterns and variations.
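For a feel of the basic operation inside a CNN, here is a toy convolution in plain Python. The vertical-edge filter is hand-picked for illustration; in a real network the filter values are learned from data, and many layers of such filters are stacked to build up from edges to more complex patterns.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image ('valid' mode, no padding).

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[y + i][x + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


def relu(feature_map):
    """Keep positive responses, zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


# Hand-picked vertical-edge filter (a CNN would learn its own values).
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# Toy image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]

feature_map = relu(convolve2d(image, vertical_edge))
print(feature_map)  # [[0, 27, 27], [0, 27, 27]]
```

The filter stays silent over the flat dark region and responds where the dark-to-bright edge falls inside its window.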

Real-World Encounters: Applications of Facial Recognition

You’re likely encountering facial recognition more often than you realize:

- Security and Access Control: Unlocking smartphones, laptops, and secure facilities.

- Law Enforcement: Identifying suspects from surveillance footage or mugshot databases (a use that is often debated).

- Social Media: Automatic photo tagging suggestions.

- Airports and Border Control: Verifying passenger identities for smoother and more secure travel.

- Retail: Personalizing customer experiences or analyzing foot traffic (with significant privacy considerations).

- Healthcare: Identifying patients to prevent medical errors or monitor health conditions.

- Smart Homes: Personalizing settings based on who is present.

The Double-Edged Sword: Challenges, Ethics, and Concerns

Despite its advancements, facial recognition technology is not without its flaws and controversies:

- Accuracy and Bias: Systems can exhibit varying accuracy rates based on age, gender, and ethnicity. Biases in training data can lead to poorer performance for underrepresented groups, raising fairness concerns.

- Privacy: The potential for widespread surveillance without consent is a major worry. How is facial data collected, stored, and used? Who has access to it?

- Security Risks: Databases of faceprints can be targets for hackers. There’s also the risk of ‘spoofing’ attacks, where systems are fooled by photos, videos, or 3D masks (though anti-spoofing technologies are improving).

- Misidentification: False positives (incorrectly matching an innocent person) or false negatives (failing to identify a known individual) can have serious consequences, especially in law enforcement.

- Lack of Regulation: The legal framework governing the use of facial recognition is still evolving in many parts of the world, leading to debates about its ethical deployment.

- The ‘Chilling Effect’: Constant surveillance, or the perception of it, can deter people from participating in public life or expressing dissent.
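The false positives and false negatives mentioned above are tied together by the matching threshold. The sketch below uses made-up similarity scores to show the trade-off: raise the threshold and fewer impostors get through, but more genuine users are rejected. The function name and all the numbers are illustrative assumptions.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false_positive_rate, false_negative_rate) at a threshold.

    A comparison counts as a match when its score is >= threshold.
    """
    false_positives = sum(1 for s in impostor_scores if s >= threshold)
    false_negatives = sum(1 for s in genuine_scores if s < threshold)
    return (
        false_positives / len(impostor_scores),
        false_negatives / len(genuine_scores),
    )


# Made-up similarity scores, for illustration only.
genuine = [0.91, 0.88, 0.95, 0.62, 0.97]   # same-person comparisons
impostor = [0.30, 0.45, 0.71, 0.20, 0.55]  # different-person comparisons

for threshold in (0.5, 0.7, 0.9):
    fpr, fnr = error_rates(genuine, impostor, threshold)
    print(f"threshold={threshold}: false positives={fpr:.0%}, false negatives={fnr:.0%}")
```

Running the same calculation separately for each demographic group is one simple way to surface the kind of accuracy gaps described in the bias bullet.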

Peeking into Tomorrow: The Future of Facial Recognition

The field of facial recognition is continuously evolving. We can expect:

- Improved Accuracy and Robustness: Ongoing research aims to make systems more reliable across diverse demographics and challenging conditions (e.g., low light, occlusions like masks).

- Enhanced Anti-Spoofing: More sophisticated methods to detect and prevent attempts to trick the system.

- Emotion Recognition: Although this is a related field rather than identity recognition itself, advances may allow systems to infer emotional states from facial expressions, opening new applications but also raising further ethical questions.

- On-Device Processing: More processing may happen directly on user devices (like your phone) to enhance privacy, rather than sending data to the cloud.

- Ethical AI Development: A growing emphasis on developing and deploying AI technologies, including facial recognition, in a responsible and ethical manner, with greater transparency and accountability.

Disclaimer: The field of AI and facial recognition is rapidly advancing. Capabilities and societal discussions are constantly evolving.

The Face of the Future is Here, For Better or Worse

Facial recognition technology, once the stuff of science fiction, is now firmly embedded in our reality. From the convenience of unlocking your phone with a smile to the complexities of its use in public safety, its impact is undeniable. Understanding how it works is the first step towards a more informed discussion about how it should be used. It’s a powerful tool, and like any powerful tool, it comes with immense potential and significant responsibilities.

Frequently Asked Questions (FAQs)

- Is facial recognition 100% accurate?

- No, facial recognition is not 100% accurate. Accuracy varies depending on the algorithm, the quality of the image, lighting conditions, pose, and demographic factors. While top systems can achieve high accuracy, errors (false positives and false negatives) can still occur.

- How is my facial data stored when I use it to unlock my phone?

- On many modern smartphones (like iPhones with Face ID or Android phones with similar features), the facial data (faceprint) is typically encrypted and stored in dedicated secure hardware within the device itself (e.g., Apple’s Secure Enclave). It’s generally not sent to the cloud or accessible to the operating system or other apps, enhancing user privacy.

- Can facial recognition be fooled?

- Early or less sophisticated systems could sometimes be fooled by high-quality photos or videos. However, modern systems often incorporate advanced anti-spoofing technologies, such as 3D depth sensing, liveness detection (checking for blinking or slight movements), and infrared imaging, making them much harder to trick.

- What’s the difference between facial detection and facial recognition?

- Facial detection is the process of finding out whether human faces are present in an image or video and, if so, where they are. It answers the question, “Is there a face here?” Facial recognition goes a step further: after a face is detected, it aims to determine whose face it is by comparing its features to a database of known faces. It answers, “Whose face is this?”

- Are there laws regulating facial recognition?

- The legal landscape for facial recognition is still developing and varies significantly by region and country. Some areas have implemented strict regulations or moratoriums on its use, particularly by government agencies, while others have fewer restrictions. There is ongoing global debate about the need for comprehensive laws to address privacy, bias, and accountability.