Imagine a vehicle navigating busy streets, highways, and parking lots entirely on its own. It accelerates, brakes, steers, and obeys traffic laws – all without a human hand on the wheel. But how on earth does it see? It doesn’t possess biological eyes, so what allows it to understand the complex, ever-changing environment around it?

The answer isn’t a single magic bullet but a sophisticated network of digital senses working in concert. Think of it not as ‘seeing’ like a human, but as gathering, processing, and interpreting massive amounts of data from multiple sources in real time. This intricate system allows the car’s ‘brain’ to build a dynamic, detailed picture of its surroundings.

The Digital Senses: More Than Just Eyes

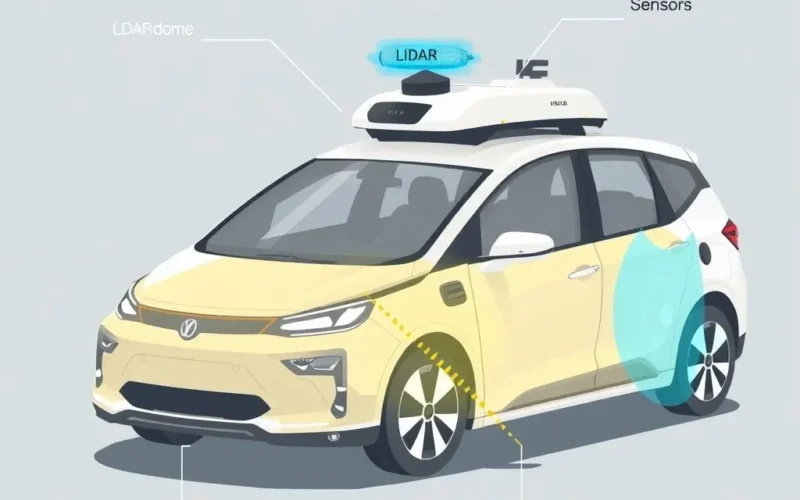

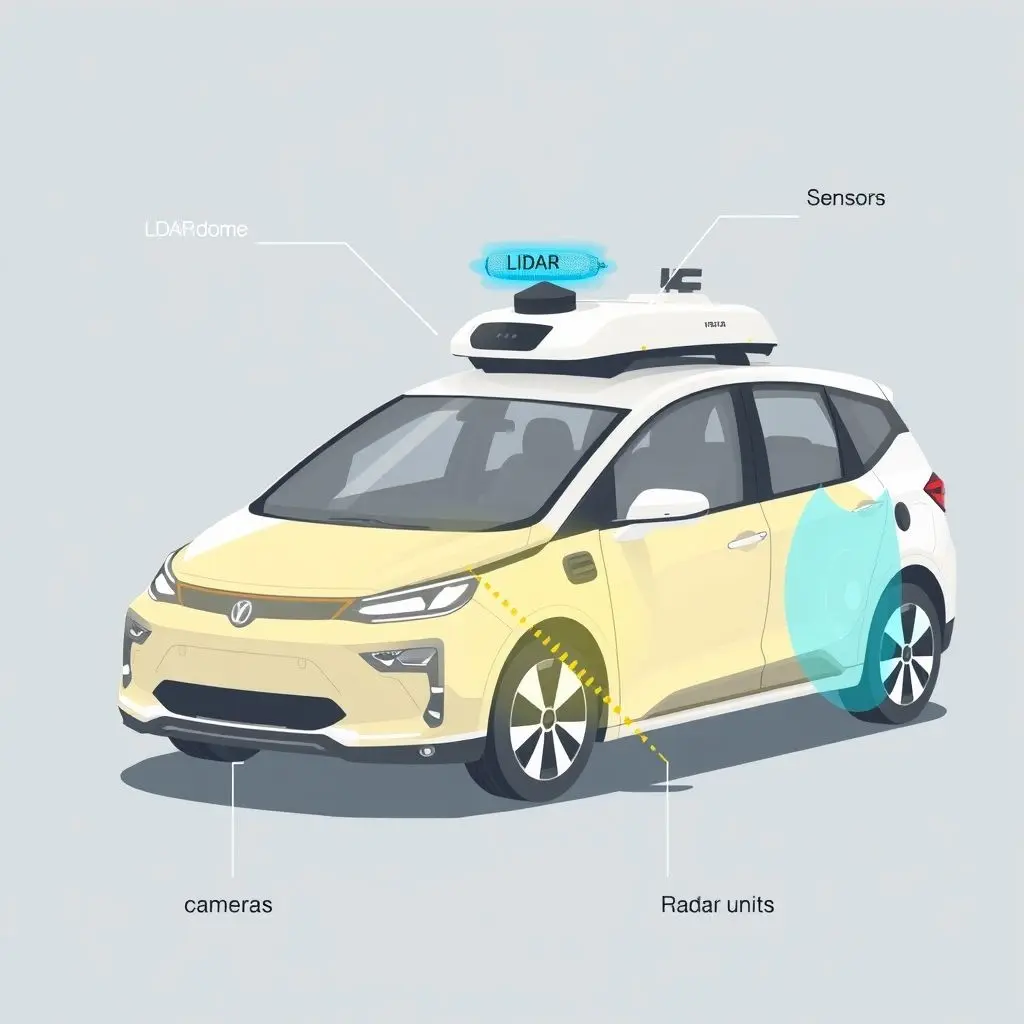

A self-driving car relies on an array of sensors, each providing a different type of information about the world. While radar and sometimes ultrasonic sensors play roles, the primary ‘eyes’ of the system are typically LiDAR and cameras. These two technologies complement each other, providing both precise distance/shape information and detailed visual context.

LiDAR: The Laser Mapping Marvel

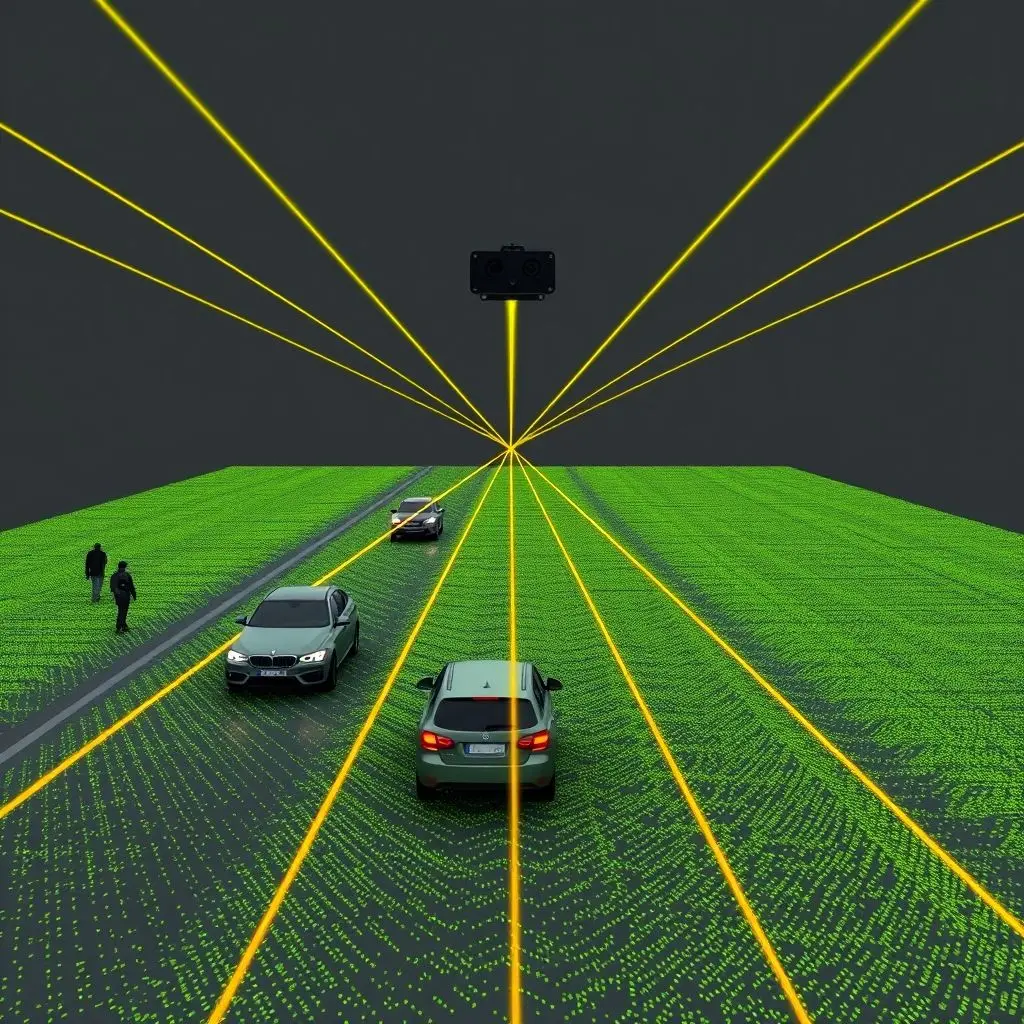

LiDAR stands for Light Detection and Ranging. Often seen as a spinning unit on the roof (though newer designs are more integrated), this sensor is a master of spatial measurement.

How LiDAR Works

LiDAR units emit hundreds of thousands to millions of laser pulses every second. These pulses bounce off objects in the environment (cars, pedestrians, buildings, trees) and return to the sensor. By precisely measuring the time each pulse takes to return, the unit calculates the distance to the object it hit: the pulse travels at the speed of light and covers the distance twice, so the distance is half the round-trip time multiplied by the speed of light. This process creates a dense ‘point cloud’ – millions of individual data points representing the shapes and positions of everything within the sensor’s range.
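The time-of-flight idea described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular LiDAR unit is implemented: the function names, and the assumption that each return comes with an azimuth and elevation angle for the beam, are hypothetical choices made for the example.

```python
import math

# Speed of light in a vacuum, in metres per second (exact by definition of the metre).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to the reflecting surface, given the pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half the path.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_point(distance_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert one range measurement plus beam angles into an (x, y, z) point.

    Assumed convention for this sketch: x forward, y left, z up, with the
    sensor at the origin.
    """
    horizontal = distance_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = distance_m * math.sin(elevation_rad)
    return (x, y, z)


# A pulse that returns after 200 nanoseconds hit something about 30 m away.
d = distance_from_round_trip(200e-9)
point = to_point(d, azimuth_rad=0.0, elevation_rad=0.0)
```

Repeating this for every returned pulse, each with its own beam angles, is what builds up the point cloud.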

What LiDAR Provides

This point cloud forms a highly accurate, 3D map of the environment. LiDAR is excellent at:

- Precise Distance Measurement: Highly accurate distances to objects, crucial for safe navigation and collision avoidance.

- 3D Mapping: Creates a detailed model of the environment, understanding the shape and structure of objects and terrain.

- Working in Low Light: Unlike cameras, LiDAR supplies its own illumination (laser pulses), so it functions effectively even in complete darkness.

However, LiDAR isn’t without its challenges. Heavy fog, rain, or snow can interfere with the laser pulses, reducing its effectiveness. Also, high-resolution LiDAR systems have traditionally been quite expensive.

Cameras: Interpreting the Visual World

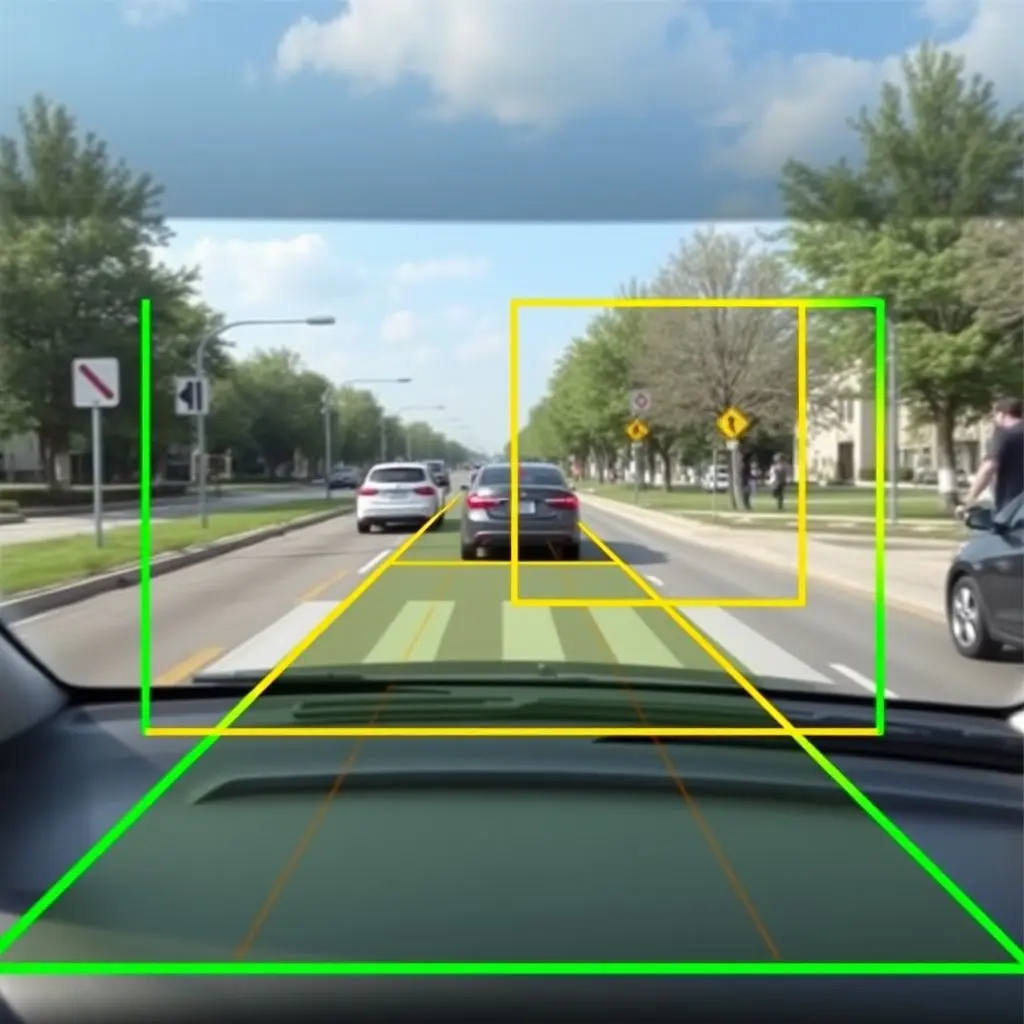

Just like our eyes, cameras are essential for understanding the visual details of the environment. Self-driving cars employ multiple cameras strategically placed around the vehicle to provide a 360-degree view.

What Cameras See

Cameras capture standard 2D images, but with the help of sophisticated computer vision algorithms, they can extract a wealth of information:

- Object Recognition: Identifying and classifying objects (cars, trucks, motorcycles, bicycles, pedestrians, animals, etc.).

- Lane Detection: Recognizing and tracking lane lines and road boundaries.

- Traffic Sign & Light Recognition: Reading text on signs and identifying the state of traffic lights (red, yellow, green).

- Semantic Understanding: Interpreting the meaning of different visual elements (e.g., distinguishing pavement from grass, or sky from buildings).

- Color & Texture: Providing rich detail that LiDAR cannot, like the color of a traffic light or the texture of a road surface.

Cameras are relatively inexpensive and provide the kind of rich visual information that humans use to navigate. However, their performance degrades significantly in challenging lighting conditions (bright sunlight, shadows, low light) and poor weather (heavy rain, fog, snow), and deriving accurate depth information from a single 2D image requires complex processing.

Curious to see a quick breakdown? This short video offers a visual glimpse into these sensor systems:

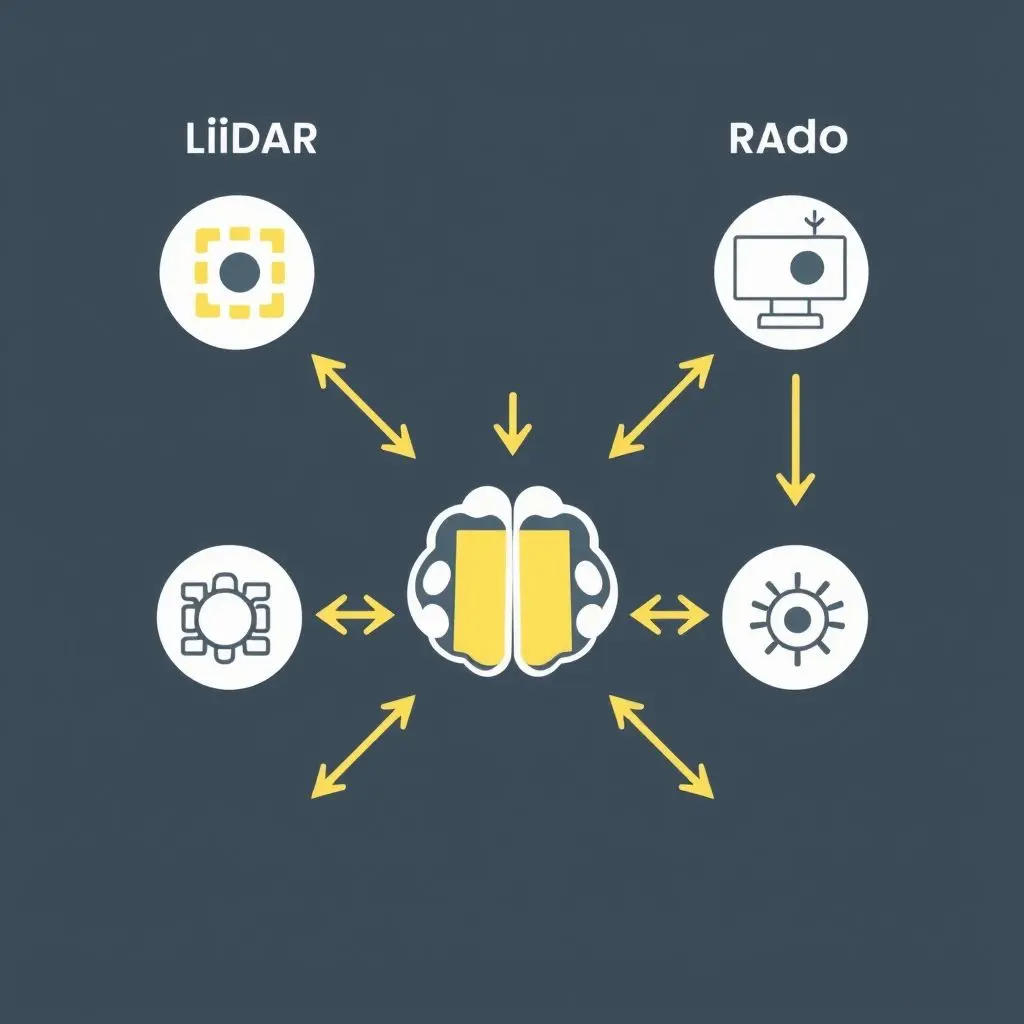

The Brain at Work: Sensor Fusion

The real magic happens when the data from all these different sensors is brought together. This process is called sensor fusion. The car’s onboard computer system (the ‘brain’) combines the accurate 3D mapping data from LiDAR, the detailed visual information from cameras, and the velocity data from radar.

Sophisticated algorithms and machine learning models process this fused data to build a comprehensive, constantly updating model of the world around the car. This model allows the car to:

- Identify Objects: Confirming what an object is by cross-referencing LiDAR shape data with camera image recognition.

- Track Movement: Monitoring the position and velocity of all dynamic objects.

- Predict Behavior: Anticipating what other road users (vehicles, pedestrians) might do next based on their current movement and the environment.
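To make tracking and prediction a little more concrete, here is a deliberately simple sketch. It assumes a constant-velocity model, which is an illustrative simplification chosen for this example. Production systems typically use far richer motion and behavior models. All names here (`TrackedObject`, `estimate_velocity`, `predict_position`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class TrackedObject:
    x_m: float          # position, metres
    y_m: float
    vx_m_per_s: float   # velocity, metres per second
    vy_m_per_s: float


def estimate_velocity(prev_xy: Tuple[float, float],
                      curr_xy: Tuple[float, float],
                      dt_s: float) -> Tuple[float, float]:
    """Velocity from two successive position measurements taken dt_s apart."""
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    return ((curr_xy[0] - prev_xy[0]) / dt_s,
            (curr_xy[1] - prev_xy[1]) / dt_s)


def predict_position(obj: TrackedObject, horizon_s: float) -> Tuple[float, float]:
    """Where the object would be after horizon_s if it kept its current velocity."""
    return (obj.x_m + obj.vx_m_per_s * horizon_s,
            obj.y_m + obj.vy_m_per_s * horizon_s)


# A cyclist seen at (0, 0) and then at (1.0, 0.5) a tenth of a second later.
vx, vy = estimate_velocity((0.0, 0.0), (1.0, 0.5), dt_s=0.1)
cyclist = TrackedObject(x_m=1.0, y_m=0.5, vx_m_per_s=vx, vy_m_per_s=vy)
future_xy = predict_position(cyclist, horizon_s=2.0)
```

Even this crude extrapolation shows why tracking matters: the car reacts to where an object is heading, not only to where it is now.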

This fusion of data allows the car to overcome the limitations of individual sensors. For example, if a camera is temporarily blinded by sunlight, the LiDAR can still provide accurate location data for nearby objects. If LiDAR struggles in fog, cameras might still be able to detect the shape of a vehicle or the color of a traffic light through the haze (albeit with reduced range).
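One simple way to picture this fallback behavior is to fuse distance estimates from several sensors, trusting the more precise ones more and skipping any sensor that has no usable reading. The sketch below uses inverse-variance weighting, a standard statistical technique chosen here purely for illustration. Real vehicles often use more elaborate methods such as Kalman filters, and the `fuse_distance` function and its reading format are assumptions of this example.

```python
from typing import Optional, Sequence, Tuple

# One reading: (distance in metres, standard deviation in metres, must be > 0).
Reading = Tuple[float, float]


def fuse_distance(readings: Sequence[Optional[Reading]]) -> Optional[Reading]:
    """Combine distance estimates, ignoring sensors that reported nothing (None).

    Each reading is weighted by 1 / variance, so a precise sensor counts for
    more than a noisy one. Returns None if no sensor has a usable reading.
    """
    usable = [r for r in readings if r is not None]
    if not usable:
        return None
    weights = [1.0 / (std ** 2) for _, std in usable]
    total = sum(weights)
    fused = sum(w * dist for w, (dist, _) in zip(weights, usable)) / total
    fused_std = (1.0 / total) ** 0.5
    return (fused, fused_std)


lidar = (20.0, 0.1)     # precise range measurement
camera = None           # e.g. temporarily blinded by sunlight
result = fuse_distance([lidar, camera])  # falls back to the LiDAR reading alone
```

When both sensors report, the fused estimate is at least as certain as the better of the two. When one drops out, the other carries on alone.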

Beyond Sight: Processing the World

Ultimately, self-driving cars don’t ‘see’ in the human sense of conscious visual perception. They process the world through layers of digital input – millions of laser pulses, billions of pixels, radar echoes – transforming raw data into a structured, actionable understanding of the environment. It’s a continuous cycle of sensing, interpreting, deciding, and acting.

While the technology is incredibly advanced, the path to fully autonomous driving still involves significant challenges, including perfecting performance in all weather conditions, navigating complex urban environments, and ensuring safety and reliability in every conceivable scenario. The ongoing development in sensor technology, AI algorithms, and processing power continues to push the boundaries of what’s possible.

Frequently Asked Questions (FAQs)

Q: Is LiDAR better than cameras for self-driving cars?

A: Neither is inherently ‘better’. They provide different, complementary types of information. LiDAR is great for precise 3D mapping and distance, especially in low light. Cameras excel at object recognition, reading signs, and understanding semantic details like color and texture. Most robust self-driving systems use a combination of both (and often radar).

Q: Why do some companies emphasize cameras more than LiDAR?

A: Some companies, like Tesla, have focused heavily on camera-only systems due to the lower cost compared to high-resolution LiDAR and the belief that human-like visual processing is sufficient with advanced AI. However, many in the industry believe that LiDAR, especially as costs decrease, provides valuable redundancy and superior spatial accuracy that enhances safety and performance, particularly in challenging conditions.

Q: Can these sensors see through objects or around corners?

A: Generally, no. LiDAR and cameras rely on a line of sight. They cannot see through solid objects. Radar can penetrate some materials and might detect objects beyond visible sight range (e.g., through fog), but it provides less detail than LiDAR or cameras. Techniques for ‘seeing around corners’ using reflected light are being researched but are not yet common in production cars.

Q: What happens if a sensor fails or is obstructed?

A: Redundancy is a key aspect of self-driving system design. Multiple sensors of the same type, along with different sensor modalities (LiDAR, cameras, radar), help ensure that the system can continue to operate safely even if one sensor is partially obstructed or experiences an anomaly. The system is designed to detect sensor failures and either rely on other sensors or safely bring the vehicle to a stop.
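As a rough illustration of that decision logic, the sketch below picks an action from the health status of each sensor type. The `Action` values, the `choose_action` function, and the rule of requiring at least two healthy modalities are all hypothetical assumptions for this example, not a description of any real vehicle’s safety policy.

```python
from enum import Enum
from typing import Mapping


class Action(Enum):
    CONTINUE = "continue"
    DEGRADED = "continue_with_reduced_capability"
    SAFE_STOP = "safe_stop"


def choose_action(sensor_ok: Mapping[str, bool], min_modalities: int = 2) -> Action:
    """Pick a response based on which sensor modalities report as healthy.

    min_modalities is an assumed threshold: below it, the sketch treats the
    remaining sensors as insufficient and brings the vehicle to a stop.
    """
    if not sensor_ok:
        return Action.SAFE_STOP  # no sensors known at all
    healthy = sum(1 for ok in sensor_ok.values() if ok)
    if healthy == len(sensor_ok):
        return Action.CONTINUE
    if healthy >= min_modalities:
        return Action.DEGRADED
    return Action.SAFE_STOP


# Camera obstructed by mud: LiDAR and radar still cover the scene.
status = {"lidar": True, "camera": False, "radar": True}
action = choose_action(status)
```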

Processing the Path Ahead

Understanding how self-driving cars perceive their environment reveals a fascinating interplay of hardware and artificial intelligence. It’s not about giving cars eyes, but about equipping them with digital senses and the computational power to interpret complex data streams, building a sophisticated, dynamic understanding of the road ahead, one data point at a time.