Ever wondered whether whales, the majestic giants of the ocean, have something akin to our own distinct speaking voices? Do their haunting, complex songs carry individual fingerprints, the way each human voice has its own tone? It’s a captivating thought, and a growing body of research suggests the answer is ‘yes’. Artificial Intelligence is rapidly emerging as a ‘voiceprint analyst’ for these magnificent marine mammals.

Imagine deploying super-sensitive microphones deep beneath the waves, capturing the incredible, intricate symphonies of whales. AI doesn’t just ‘hear’ these sounds; it visually maps them, meticulously spotting subtle patterns and unique variations specific to a single, individual whale. It’s like facial recognition, but for their acoustic signatures!

The Unseen Symphony: Decoding Ocean’s Deepest Voices

For centuries, the vast, echoing calls of whales have captivated humanity. From the low-frequency moans of blue whales, which can carry for hundreds of miles, to the complex, repeating phrases of humpback whale ‘songs,’ these vocalizations serve a multitude of purposes: communication, navigation, mating, and even hunting. But within this grand chorus lies an even more astonishing detail: individual whales, much like humans, appear to possess unique vocal characteristics.

While we might distinguish between a humpback’s song and a sperm whale’s clicks, identifying one specific humpback from another by sound alone has been an immense challenge. The ocean is a noisy place, and the sheer volume and complexity of whale vocalizations make human-based individual identification nearly impossible on a large scale.

AI Takes the Helm: Unraveling Acoustic Signatures

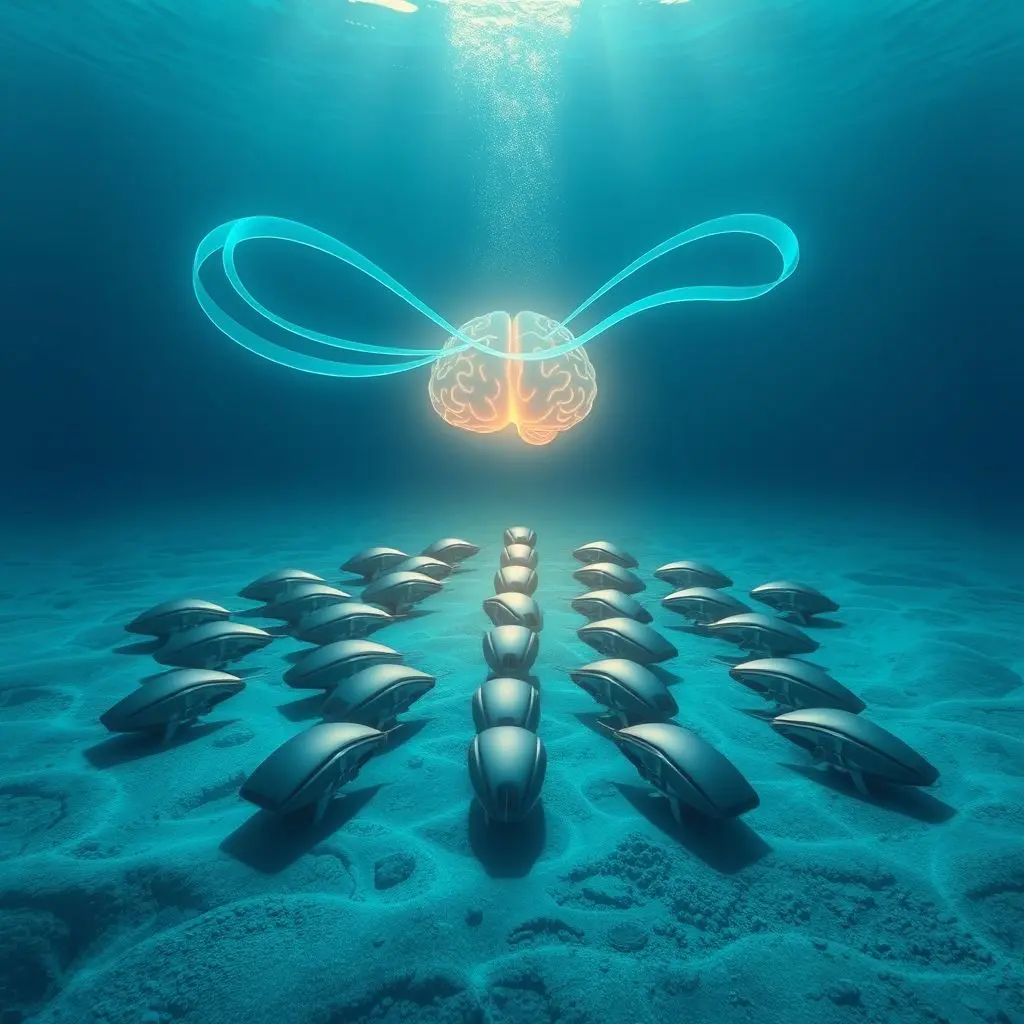

This is where Artificial Intelligence steps in as the ultimate acoustic detective. By harnessing the power of advanced algorithms, researchers are transforming raw underwater sound data into actionable insights, finally enabling the non-invasive identification of individual whales.

How AI Deciphers Whale Voices

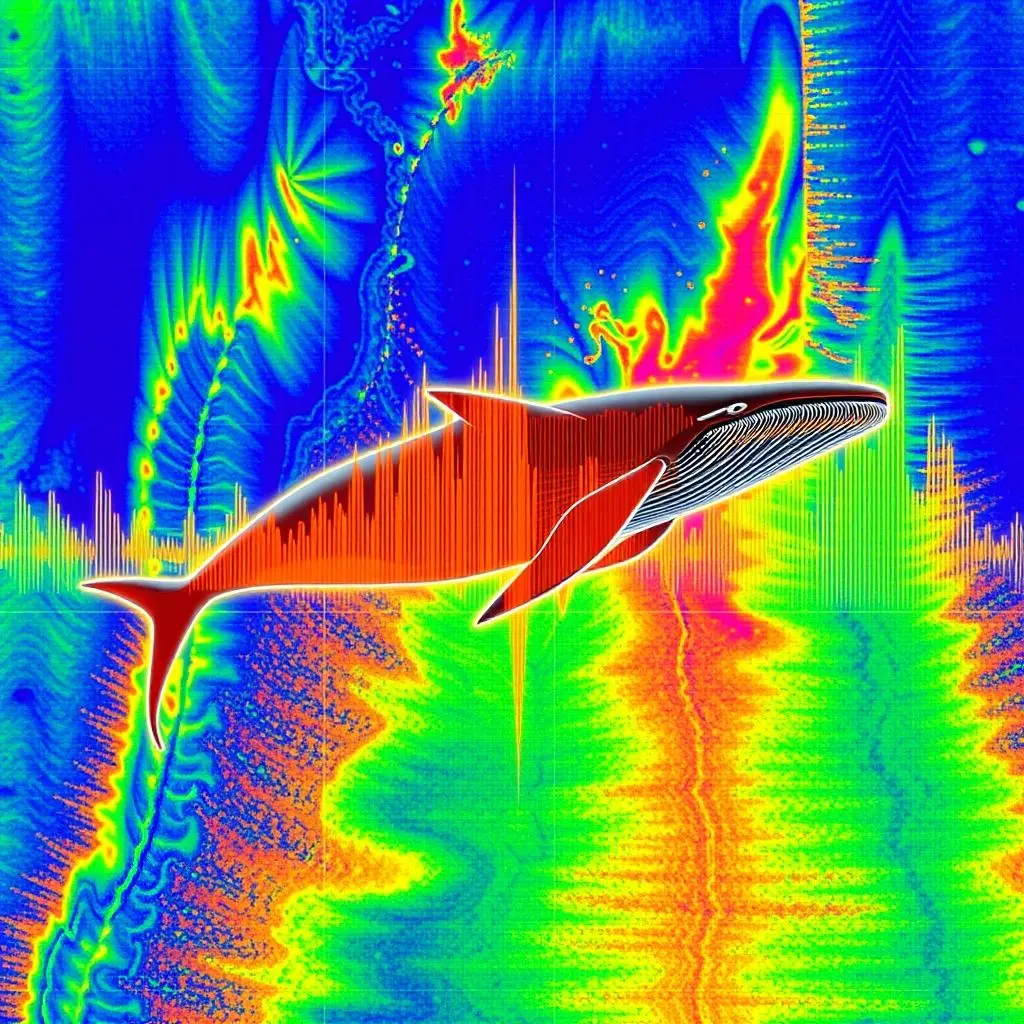

The process begins with the deployment of highly sensitive hydrophones (underwater microphones). These devices capture vast amounts of acoustic data, essentially recording the ‘conversations’ and ‘songs’ of the ocean. This raw audio, however, is just the starting point. Before any AI model looks at it, the audio is converted into a visual format known as a spectrogram, and it is this image that the AI analyzes.

A spectrogram is a visual representation of sound, mapping frequency (pitch) against time, with intensity often represented by color or brightness. For human eyes, a spectrogram might show a general pattern, but for AI, it reveals a universe of subtle nuances. AI algorithms can scrutinize these spectrograms, spotting minute patterns, unique vocal inflections, and specific variations that a human analyst would easily miss. Together, these features form an individual whale’s acoustic signature.
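To make the idea concrete, here is a minimal sketch of how a spectrogram can be computed. It uses only Python’s standard library and a deliberately slow, naive Fourier transform so that it stays self-contained; real pipelines would normally rely on an optimized signal-processing library. The `spectrogram` function, the frame sizes, and the synthetic 250 Hz tone are illustrative assumptions, not values from any particular whale study.

```python
import cmath
import math


def spectrogram(samples, frame_size=64, hop=32):
    """Return a list of frames; each frame holds the magnitude of every
    frequency bin from 0 Hz up to the Nyquist frequency."""
    # A Hann window tapers each frame's edges to reduce spectral leakage.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        # Naive discrete Fourier transform, chosen for readability, not speed.
        magnitudes = []
        for k in range(frame_size // 2 + 1):
            total = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                        for n in range(frame_size))
            magnitudes.append(abs(total))
        frames.append(magnitudes)
    return frames


# Synthetic stand-in for a recording: a 250 Hz tone sampled at 2000 Hz.
SAMPLE_RATE = 2000
FRAME_SIZE = 64
tone = [math.sin(2 * math.pi * 250 * t / SAMPLE_RATE) for t in range(512)]
spec = spectrogram(tone, frame_size=FRAME_SIZE, hop=32)

# Bin k corresponds to a frequency of k * SAMPLE_RATE / FRAME_SIZE Hz.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / FRAME_SIZE
print(f"{len(spec)} time frames, strongest frequency near {peak_hz} Hz")
```

Each row of `spec` is one slice of time and each column one frequency band, which is exactly the grid that gets drawn as an image. A real whale call would light up several bins that shift over time rather than a single steady one.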

The Mechanics Behind the Magic: How AI Listens and Learns

- Data Collection: It all starts with vast amounts of high-fidelity audio captured by hydrophone arrays, autonomous underwater vehicles (AUVs), and passive acoustic monitoring (PAM) systems deployed across diverse marine habitats. The sheer volume and consistency of this data are crucial for training robust AI models.

- Pre-processing: Before analysis, raw audio is often cleaned. This involves filtering out background noise (ship engines, seismic surveys, other marine life) and isolating specific whale vocalizations.

- Feature Extraction: This is where AI truly shines. Algorithms are designed to extract critical features from the spectrograms. These features can include pitch contours, rhythmic patterns, harmonic structures, unique frequency modulations, and specific vocal ‘quirks’ that are highly stable and unique to an individual over time.

- Machine Learning Algorithms:

  - Supervised Learning: In this approach, AI models are ‘trained’ using labeled datasets: spectrograms of known individual whales (identified through traditional methods like photo-ID or genetics) paired with their unique identifiers. The AI learns to associate specific acoustic signatures with specific individuals.

  - Deep Learning & Neural Networks: Deep learning models are particularly powerful for bioacoustics. Convolutional Neural Networks (CNNs) excel at image recognition, and spectrograms are essentially images, while Recurrent Neural Networks (RNNs) are well suited to sequential data. Both can automatically discover complex, hierarchical patterns within the acoustic data without explicit feature engineering, and can learn to differentiate subtle variations in whale vocalizations.

- Pattern Matching & Identification: Once trained, the AI model can analyze new, unlabelled whale vocalizations. It compares these against the acoustic signatures it has learned and reports the closest match to a known individual, provided the match is strong enough to be trusted.
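The final matching step in the list above can be sketched in a few lines. This is a simplified stand-in, not how any specific research system works: it assumes each call has already been reduced to a short feature vector, and it uses cosine similarity against one stored signature per whale instead of a trained neural network. The catalogue entries, feature values, and the 0.95 threshold are all made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two feature vectors: 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(features, catalogue, threshold=0.95):
    """Return (whale_id, score) for the closest stored signature, or
    (None, score) when nothing in the catalogue is similar enough."""
    best_id, best_score = None, -1.0
    for whale_id, signature in catalogue.items():
        score = cosine_similarity(features, signature)
        if score > best_score:
            best_id, best_score = whale_id, score
    if best_score < threshold:
        return None, best_score
    return best_id, best_score


# Hypothetical catalogue of normalized features for two known whales.
catalogue = {
    "whale_A": [0.9, 0.1, 0.4],
    "whale_B": [0.2, 0.8, 0.5],
}

match, score = identify([0.88, 0.12, 0.41], catalogue)
unknown, low_score = identify([0.1, 0.1, 0.9], catalogue)
print(match, round(score, 3))
print(unknown, round(low_score, 3))
```

The threshold matters as much as the similarity measure: without it, every new call would be forced onto some known whale, even when it comes from an individual the catalogue has never seen.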

A Tidal Wave of Benefits for Marine Conservation

This isn’t just fascinating technology; it’s genuinely revolutionary for marine conservation. The ability to identify individual whales non-invasively, purely through their vocalizations, unlocks unprecedented opportunities for understanding and protecting these vital species.

- Precision Tracking of Individuals: Knowing ‘who’ is ‘where’ at any given time is invaluable. This allows researchers to study individual life histories, track reproductive success, monitor health status, and even identify specific whales that might be at higher risk from human activities like ship strikes or entanglement.

- Accurate Population Estimates: For many elusive or deep-diving whale species, visual surveys are impractical or inaccurate. AI-driven acoustic identification offers a robust, continuous method for counting individuals and estimating population sizes, providing crucial data for conservation status assessments and effective management strategies.

- Unveiling Migration Routes and Habitat Use: By continuously monitoring acoustic signatures across vast ocean expanses, scientists can map migration routes and understand preferred feeding, breeding, and resting grounds with unprecedented detail. This information is critical for designating and managing marine protected areas.

- Deciphering Social Structures and Behavior: AI can help decode the intricate social dynamics within whale populations. It can identify family groups, track social bonds, understand communication networks, and even detect unique ‘dialects’ or group-specific vocalizations, offering profound insights into their complex lives.

- Non-Invasive Monitoring: Perhaps one of the most significant advantages is the non-invasive nature of this technology. Unlike tagging or biopsy sampling, acoustic monitoring doesn’t disturb the whales, ensuring their natural behavior remains unaltered while valuable data is collected.

Navigating the Depths: Challenges and Future Horizons

While immensely promising, the widespread application of AI for whale identification still faces certain challenges:

- Data Volume and Quality: Processing petabytes of raw acoustic data, often plagued by ambient ocean noise or varying recording conditions, requires sophisticated filtering and robust computational infrastructure.

- Computational Resources: Training and deploying complex deep learning models demand significant computational power, often requiring cloud-based solutions or high-performance computing clusters.

- Ground Truthing: Initial validation of AI identifications often requires ‘ground truthing’ – cross-referencing acoustic identifications with visual sightings, photo-ID catalogues, or genetic samples to ensure accuracy. This can be resource-intensive.

- Algorithm Sophistication: Continuous refinement of algorithms is necessary to improve accuracy, reduce false positives, and handle the vast diversity and variability of whale vocalizations across different species and environments.
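Ground truthing, in particular, boils down to a simple comparison once both sets of labels exist. The sketch below assumes the acoustic identifications and the independently confirmed identities (from photo-ID, for example) are each stored as a mapping from recording name to whale ID. The function name, recording names, and IDs are hypothetical.

```python
def evaluate(predictions, ground_truth):
    """Compare acoustic IDs with independently confirmed IDs.

    Both arguments map a recording name to a whale ID. Only recordings
    present in both mappings are scored.
    """
    shared = sorted(set(predictions) & set(ground_truth))
    mismatches = [(rec, predictions[rec], ground_truth[rec])
                  for rec in shared if predictions[rec] != ground_truth[rec]]
    correct = len(shared) - len(mismatches)
    accuracy = correct / len(shared) if shared else 0.0
    return accuracy, mismatches


# Made-up example: what the model said versus what field teams confirmed.
predictions = {
    "rec_01": "whale_A",
    "rec_02": "whale_A",
    "rec_03": "whale_B",
    "rec_04": "whale_C",
    "rec_05": "whale_B",  # no confirmed identity, so it is not scored
}
ground_truth = {
    "rec_01": "whale_A",
    "rec_02": "whale_A",
    "rec_03": "whale_C",
    "rec_04": "whale_C",
}

accuracy, mismatches = evaluate(predictions, ground_truth)
print(f"accuracy: {accuracy:.0%}")
print("needs review:", mismatches)
```

Listing the mismatches, and not just the headline accuracy, shows which individuals the model tends to confuse, which is where additional training data is likely to help most.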

Despite these hurdles, the future is incredibly bright. We can anticipate real-time monitoring systems that provide early warnings of whale presence in shipping lanes, mitigating collision risks. Integrated with other sensor data, AI will paint an even richer picture of marine ecosystems, allowing for more dynamic and responsive conservation strategies. The ethical implications of persistent tracking, even non-invasive, will also be an important area of discussion as the technology evolves.

The Echoes of Tomorrow

The convergence of AI and bioacoustics is opening up a fascinating new chapter in marine biology and conservation. By enabling us to ‘listen’ to the ocean in an entirely new way, AI is helping us understand the lives of whales with unprecedented depth and precision. Whale song is a complex art, and with AI’s keen ear we are beginning to tell its individual performers apart. This technology promises not just to satisfy our curiosity but to equip us with powerful tools to protect these magnificent creatures for generations to come.

Frequently Asked Questions (FAQs)

Q1: What types of whales can AI identify using their songs?

AI is showing promise across several baleen whale species known for their songs, such as humpback whales, blue whales, and fin whales. For toothed whales like sperm whales, the approach relies on click patterns rather than songs. The effectiveness can vary by species, depending on the distinctiveness of their vocalizations and the availability of sufficient training data.

Q2: How accurate is AI in identifying individual whales?

The accuracy varies depending on the species, the quality and volume of training data, and the sophistication of the AI model. Research is continually improving accuracy, with some studies reporting high success rates (e.g., over 90%) for identifying specific individuals within well-studied populations. However, it’s an evolving field, and achieving 100% accuracy in real-world, noisy environments remains a challenge.

Q3: Is this technology expensive or widely available?

While the initial setup for large-scale acoustic monitoring (hydrophone arrays, data storage) can be costly, the AI software itself is becoming more accessible. Researchers worldwide are developing open-source tools and leveraging cloud computing to make these powerful analytical methods more widely available. However, deploying comprehensive systems still requires significant funding and expertise.

Q4: How does AI-based identification compare to traditional whale tracking methods?

Traditional methods include photo-identification (matching unique markings), genetic sampling (biopsy darts), and satellite tagging. AI-based acoustic identification offers a significant advantage by being completely non-invasive and capable of continuous, long-term monitoring over vast areas without needing to physically interact with the whales. It complements, rather than replaces, traditional methods, often providing new insights that other techniques cannot.

Q5: Can AI help protect whales from human activities like ship strikes or entanglement?

Absolutely. By identifying individual whales and tracking their presence in near real-time, AI can contribute to early warning systems for mariners, helping ships avoid collision with whales. It can also inform dynamic ocean management strategies, such as temporary speed restrictions or rerouting of shipping lanes in areas where specific at-risk individuals or high-density whale populations are detected acoustically.
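As a rough illustration of how such an early warning rule might look, the sketch below flags a shipping zone when it has at least one recent, confident acoustic detection. Everything here is an assumption made for the example: the `Detection` fields, the zone names, the 30-minute window, and the 0.8 confidence cut-off. An operational system would need far more care around location accuracy and false alarms.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    whale_id: str
    zone: str           # hypothetical label for the area the hydrophone covers
    minutes_ago: float  # time since the call was recorded
    confidence: float   # classifier score between 0 and 1


def zones_needing_alert(detections, watched_zones,
                        max_age_min=30, min_confidence=0.8):
    """Return the watched zones with at least one recent, confident detection."""
    alerts = set()
    for d in detections:
        recent = d.minutes_ago <= max_age_min
        confident = d.confidence >= min_confidence
        if d.zone in watched_zones and recent and confident:
            alerts.add(d.zone)
    return sorted(alerts)


# Made-up detections for illustration.
detections = [
    Detection("whale_A", "lane_north", 5, 0.93),   # triggers an alert
    Detection("whale_B", "lane_south", 90, 0.97),  # too old
    Detection("whale_C", "lane_south", 10, 0.55),  # too uncertain
    Detection("whale_A", "offshore", 2, 0.99),     # not a watched zone
]

alerts = zones_needing_alert(detections, {"lane_north", "lane_south"})
print("slow down in:", alerts)
```

In this toy run only `lane_north` is flagged, since the other detections are stale, low-confidence, or outside the watched lanes.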