Have you ever scrolled online and paused, wondering if that striking image, that unique piece of digital art, or even that eerily realistic human face was actually created by a computer? If you have, you’ve likely encountered the work of something truly revolutionary in the world of artificial intelligence: a Generative Adversarial Network, or GAN.

It sounds complex, but at its core, a GAN is driven by a fascinating dynamic – a sort of digital rivalry that pushes AI to unprecedented levels of creativity and realism. Imagine two distinct AI models locked in a continuous game of one-upmanship, each trying to outperform the other. One, the creative force, is dedicated to crafting convincing fakes. The other, a stern critic, is solely focused on spotting those fakes.

It’s this back-and-forth, this constant adversarial process, that enables GANs to generate entirely novel data that is often astonishingly indistinguishable from the real thing. It’s how machines learn not just to replicate, but to *imagine* new possibilities.

Speaking of imagining, here’s a quick visual byte that breaks down the core idea of GANs:

Table of Contents

Diving Deeper: The GAN Framework

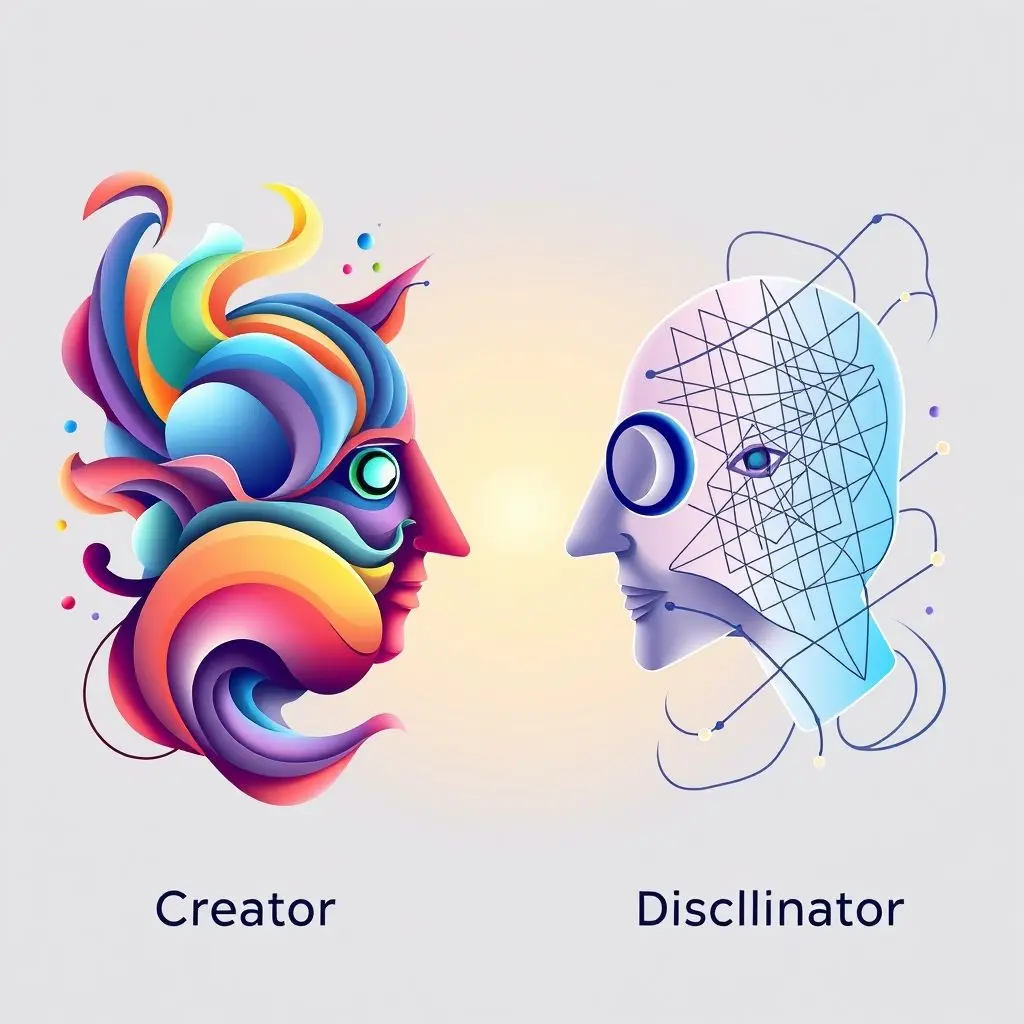

So, how does this digital rivalry actually work? A Generative Adversarial Network isn’t a single neural network, but rather a system comprising two distinct neural networks:

- The Generator: This network’s job is to learn the underlying data distribution of the real examples it’s being shown (indirectly) and generate new data points that mimic this distribution. Think of it as the aspiring artist, trying to produce works that look genuine. It starts by generating random noise and learns to transform this noise into structured data like images, audio, or text.

- The Discriminator: This network acts as the expert critic or detective. It’s trained to distinguish between real data (from the training dataset) and fake data (produced by the Generator). It takes an input – either a real data point or a generated data point – and outputs a probability that the input is real.

The two networks are trained simultaneously in a zero-sum game setting. The Generator’s goal is to maximize the probability of the Discriminator making a mistake (i.e., classifying generated data as real). The Discriminator’s goal is to minimize this probability (i.e., correctly classifying data as either real or fake).

Let’s visualize this:

The Training Dance: An Adversarial Loop

The real magic happens in the training process. It’s an iterative loop of challenge and improvement:

- Generator Creates: The Generator takes a random noise vector as input and transforms it into a synthetic data sample (e.g., a fake image).

- Discriminator Evaluates: The Discriminator is then presented with a mix of real data samples (from the training set) and the fake data samples generated by the Generator.

- Discriminator Learns: The Discriminator attempts to classify each sample as either ‘real’ or ‘fake’. It receives feedback on its performance (its ‘loss’) and updates its internal parameters (weights and biases) via backpropagation to get better at this classification task.

- Generator Learns: Simultaneously, the Generator receives feedback based on how well it managed to fool the Discriminator. The gradients from the Discriminator’s loss are backpropagated *through* the Discriminator to the Generator. This tells the Generator how to adjust its own parameters so that the *next* fake data it generates will be more likely to be classified as ‘real’ by the Discriminator.

This process repeats over many training epochs. The Generator continuously hones its ability to produce data that looks real, while the Discriminator continually improves its ability to detect the subtle differences between real and fake. Ideally, the training converges when the Generator produces data that is so realistic that the Discriminator can no longer distinguish it from real data, essentially guessing with 50% accuracy.

Why GANs Stand Out for Generation

What makes GANs particularly effective for generating highly realistic and complex data, especially images? Their adversarial nature forces the Generator to learn the intricate details and variations present in the real data distribution to a very high degree. Unlike some other generative models that might blur details or produce less sharp outputs, the direct competition in GANs drives the Generator towards producing crisp, convincing outputs that capture the perceptual characteristics of the real data.

The results can be quite stunning, like generating faces of people who don’t exist or creating hyper-realistic textures.

Fascinating Applications of GANs

The power of GANs extends far beyond just generating fake faces. Their ability to synthesize realistic data has led to a wide range of practical and creative applications:

- Realistic Image Synthesis: Creating photorealistic images of scenes, objects, and people that are entirely synthetic.

- Image-to-Image Translation: Transforming images from one domain to another, such as changing seasons (summer to winter), applying artistic styles, turning sketches into photorealistic images, or converting satellite images to maps.

- Text-to-Image Synthesis: Although often associated with newer diffusion models, early breakthroughs and ongoing research using GANs contribute to generating images from textual descriptions.

- Data Augmentation: Generating synthetic training data to expand limited datasets for training other machine learning models, particularly useful in fields like medical imaging.

- Super-resolution: Enhancing the resolution of low-resolution images by generating plausible high-resolution details.

- Novel Drug Discovery: Generating molecular structures with desired properties (a more advanced application).

- Generating Music and Text: While less common than image generation, GANs have been explored for generating sequential data like music compositions and text.

Challenges Along the Way

Despite their incredible capabilities, training GANs isn’t always straightforward. They are known for being notoriously difficult to train stably. Some common issues include:

- Mode Collapse: The Generator might get stuck producing only a very limited variety of outputs that can fool the current Discriminator, failing to capture the full diversity of the real data distribution.

- Non-convergence: The training process might oscillate or never truly reach a stable equilibrium where both networks are optimized.

- Evaluation Difficulty: Quantitatively evaluating the quality and diversity of generated samples remains a challenge, often requiring subjective human evaluation.

- Computational Cost: Training can be computationally intensive, requiring significant hardware resources and time.

Furthermore, the power of GANs to create convincing fakes raises ethical concerns, particularly regarding the potential misuse for creating deepfakes and spreading misinformation.

Frequently Asked Questions About GANs

Are GANs a type of supervised or unsupervised learning?

GANs are generally considered an unsupervised learning approach because they learn to generate data that matches the distribution of a given dataset without needing explicit, human-labeled input-output pairs for the generation itself. While the Discriminator is trained using labels (‘real’ or ‘fake’), the overall framework aims to model the inherent structure of the data.

What is mode collapse in GANs?

Mode collapse is a common failure mode during GAN training where the Generator produces samples with very little variety. Instead of generating diverse outputs that cover the entire distribution of the real data, it focuses on generating only a few types of samples that are good at fooling the current Discriminator, but fail to represent the full range of possibilities in the dataset.

How do GANs differ from VAEs (Variational Autoencoders)?

Both GANs and VAEs are generative models, but they work differently. VAEs use an encoding-decoding structure and rely on variational inference to learn a smooth latent representation of the data, making them easier to sample from and less prone to mode collapse. However, their outputs can sometimes be blurry. GANs, through their adversarial training, tend to produce sharper and more perceptually realistic outputs, but are harder to train and manage.

Can GANs generate more than just images?

Absolutely! While image generation is the most prominent and successful application, GANs can be adapted to generate other types of data, including text, music, time series data, and even molecular structures, by modifying the architecture of the Generator and Discriminator networks to handle the specific data format.

Where AI Learns to Dream

Generative Adversarial Networks represent a significant leap forward in the field of artificial intelligence, pushing the boundaries of what machines can create. By pitting two networks against each other in a creative arms race, GANs have unlocked the ability to synthesize remarkably realistic and novel data, transforming fields from digital art and entertainment to science and healthcare.

While challenges in training stability and ethical considerations persist, the fundamental concept of adversarial learning continues to inspire new architectures and applications in the broader landscape of generative AI. GANs have truly taught AI how to dream up possibilities that never existed before, proving that sometimes, a brilliant imitation can lead to entirely new forms of reality.

Liked this deep dive into GANs? We’re generating more awesome tech content every week! Don’t be a discriminator – show your support by hitting that like button and subscribing to our channel and blog for more insights!