For the longest time, when we pictured robots, we often saw clunky machines performing repetitive, pre-programmed tasks on an assembly line. Think of them as highly efficient tools, but fundamentally lacking the spark of independent thought or the ability to handle anything unexpected. They were masters of automation, yes, but strictly within the confines of their coded instructions.

But the world of robotics is undergoing a profound transformation, and the engine driving this change is Artificial Intelligence (AI). We’re witnessing a leap that takes robots far beyond mere automation, evolving them into truly intelligent systems. These aren’t just machines following commands; they are becoming entities that can learn from their environment, engage naturally with humans and other robots, adapt on the fly to unforeseen circumstances, and even tackle complex decision-making in highly unpredictable scenarios.

Rather than remaining static instruments, they are stepping into roles as dynamic collaborators, grasping context and working alongside their human counterparts. It’s a shift from fixed programming to fluid intelligence, where AI provides the ‘brains’ that allow robots to think, perceive, and interact in ways previously confined to science fiction. From the hardware up through the code, AI is powering a future where robots contribute side by side with us.

From Automated Arms to Cognitive Companions

The traditional robot is a marvel of engineering precision, excelling at specific, repeatable tasks. Spot welding on a car frame, picking and placing items on a conveyor belt, painting components: these are the hallmarks of classical industrial automation. These robots operate in highly structured environments where variation is kept to a minimum. They are fast, accurate, and tireless within their defined parameters.

However, their intelligence is limited to executing pre-set programs. Introduce an unexpected object, a slightly misplaced component, or a human walking into their path, and they will often halt or fail. They lack the perception, understanding, and flexibility to handle novelty or uncertainty. They don’t *understand* their task; they merely *perform* it based on explicit instructions.

AI changes this fundamental equation. By integrating capabilities like machine learning, computer vision, natural language processing, and complex planning algorithms, robots gain a level of cognitive function. They move from being purely *automated* to becoming genuinely *intelligent*.

Key AI Enablers of Robotic Intelligence

What specific AI technologies are fueling this transformation? Let’s delve into the core capabilities:

Machine Learning and Deep Learning

At its heart, AI allows robots to learn. Instead of being explicitly programmed for every conceivable scenario, machine learning (ML) algorithms enable robots to learn from data and experience. Deep Learning, a subset of ML using neural networks, is particularly powerful for processing complex sensory data. This means a robot can learn to identify objects, recognize patterns, refine its movements based on feedback, or predict outcomes without a human writing specific code for each permutation.

For example, a robotic arm in a warehouse equipped with ML can learn to grasp objects of various shapes and sizes it has never encountered before, simply by being trained on a dataset of successful grasps. Or a mobile robot can learn to navigate a cluttered office space by observing human paths and avoiding obstacles it previously collided with.
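To make "learning from a dataset of successful grasps" a little more concrete, here is a minimal sketch using a k-nearest-neighbors vote. Everything in it is an assumption for illustration: the two features (object width and approach angle), the six training examples, and the function name `predict_grasp_success`. Real grasping systems typically learn from camera or depth data with far larger models.

```python
import math

# Hypothetical training data:
# (object width in cm, gripper approach angle in degrees) -> did the grasp succeed?
TRAINING_GRASPS = [
    ((2.0, 0.0), True),
    ((2.5, 10.0), True),
    ((3.0, 5.0), True),
    ((8.0, 45.0), False),
    ((9.0, 60.0), False),
    ((7.5, 50.0), False),
]


def predict_grasp_success(features, k=3):
    """Predict success by majority vote among the k closest training grasps."""
    by_distance = sorted(
        TRAINING_GRASPS,
        key=lambda example: math.dist(example[0], features),
    )
    votes = [label for _, label in by_distance[:k]]
    return votes.count(True) > k / 2


# An object the robot has not seen before, described by the same two features.
print(predict_grasp_success((2.2, 3.0)))
print(predict_grasp_success((8.5, 55.0)))
```

No rule for "narrow objects at shallow angles" was ever written by hand; the prediction comes entirely from the recorded examples, which is the point of the paragraph above.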

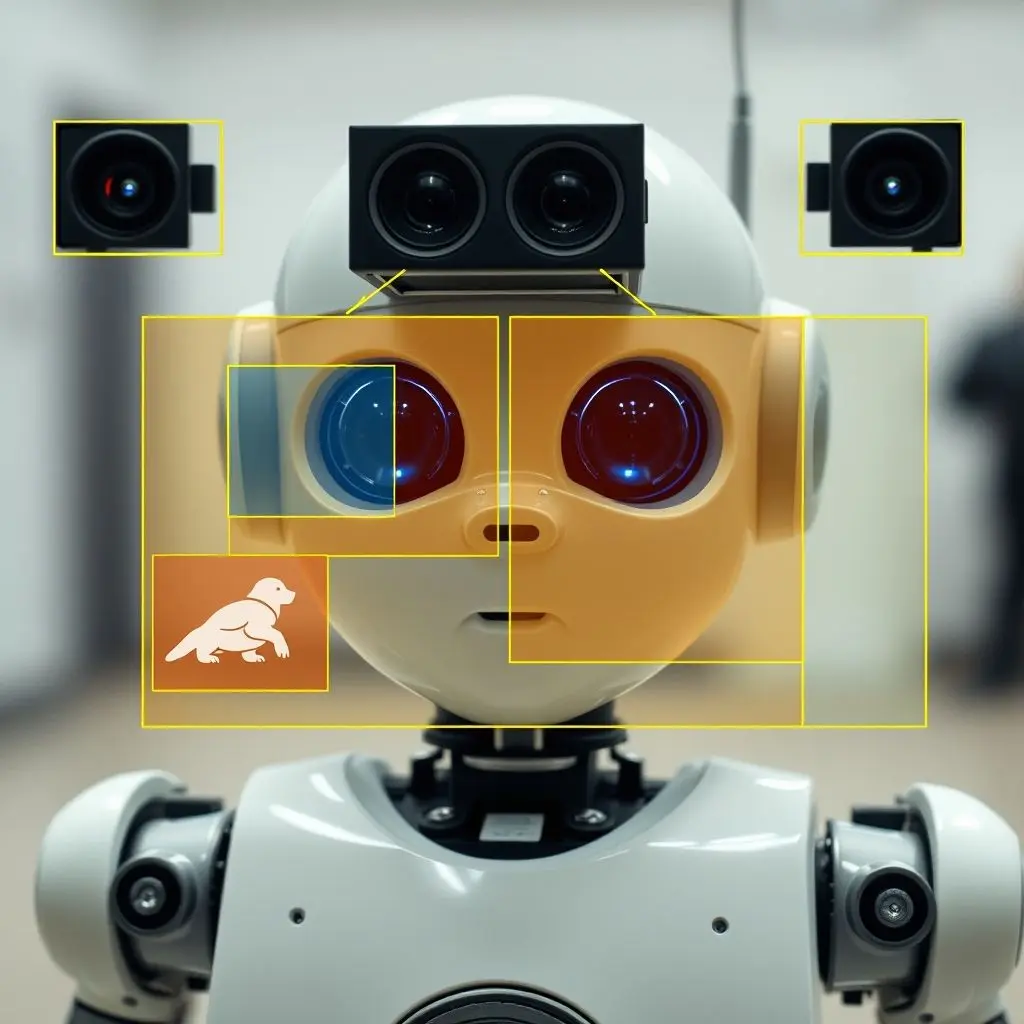

Computer Vision

Giving robots the ability to ‘see’ and interpret their surroundings is crucial. Computer vision techniques, often powered by deep learning, allow robots to identify objects, track movement, recognize faces, understand scenes, and even gauge distances and spatial relationships. This enables robots to work in dynamic environments, locate items, inspect products for defects, or navigate complex terrain safely.

Think of an autonomous delivery robot navigating city streets. It uses computer vision to detect pedestrians, cyclists, vehicles, traffic lights, and unexpected obstacles like potholes or construction zones, making real-time decisions based on what it ‘sees’.
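As a toy illustration of turning pixels into something a robot can act on, the sketch below locates a bright object in a tiny grayscale frame using plain thresholding. This is a classical technique standing in for the deep-learning detectors mentioned above, and the frame values, the threshold, and the name `locate_bright_object` are all made up for the example.

```python
def locate_bright_object(image, threshold=128):
    """Return (centroid, bounding_box) for pixels brighter than threshold, or None.

    centroid is (row, col); bounding_box is (min_row, min_col, max_row, max_col).
    """
    pixels = [
        (r, c)
        for r, row in enumerate(image)
        for c, value in enumerate(row)
        if value > threshold
    ]
    if not pixels:
        return None
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    centroid = (sum(rows) / len(rows), sum(cols) / len(cols))
    bounding_box = (min(rows), min(cols), max(rows), max(cols))
    return centroid, bounding_box


# Hypothetical 4x5 grayscale frame with one bright 2x2 object.
FRAME = [
    [10, 12, 11, 9, 10],
    [11, 200, 210, 12, 10],
    [10, 205, 220, 11, 9],
    [9, 10, 12, 10, 11],
]

print(locate_bright_object(FRAME))
```

The output (a position and an extent) is the kind of compact description that downstream planning code can use, regardless of whether it came from a threshold or a neural network.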

Natural Language Processing (NLP)

To collaborate effectively with humans, robots need to understand our language. NLP allows robots to process and interpret spoken or written human commands and requests. While still evolving, this capability is vital for service robots, collaborative industrial robots, or even advanced home assistants that need to respond to complex instructions or engage in limited dialogue.

A robot assistant in a hospital might use NLP to understand a nurse’s request to fetch specific supplies, while a collaborative robot on a factory floor might respond to voice commands from a technician.
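A very rough sketch of the first step, mapping an utterance to an intent, might look like the following. It is a keyword lookup, not a real language model, and the vocabulary in `ACTIONS` and the function `parse_command` are hypothetical; production systems would use speech recognition plus a trained language-understanding model.

```python
import re

# Hypothetical vocabulary: several verbs map onto the same robot intent.
ACTIONS = {
    "fetch": "fetch",
    "bring": "fetch",
    "get": "fetch",
    "stop": "stop",
    "halt": "stop",
}


def parse_command(utterance):
    """Return the first recognized intent and the words that follow its verb."""
    words = re.findall(r"[a-z0-9']+", utterance.lower())
    for index, word in enumerate(words):
        if word in ACTIONS:
            target = " ".join(words[index + 1:])
            return {"intent": ACTIONS[word], "target": target}
    return {"intent": "unknown", "target": ""}


print(parse_command("Please bring sterile gloves."))
print(parse_command("Halt!"))
```

Even this toy shows why the problem is hard: anything phrased outside the small vocabulary falls through to "unknown", which is exactly the brittleness that learned NLP models aim to reduce.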

Path Planning and Navigation

Moving intelligently isn’t just about following a pre-set map. AI-powered path planning allows robots to find the most efficient or safest routes in complex, changing environments, often in real time. This involves building a map while tracking the robot’s own position within it (SLAM, Simultaneous Localization and Mapping), avoiding obstacles, and anticipating how obstacles may move or the environment may change.

Autonomous vehicles are prime examples, constantly planning and replanning paths based on traffic conditions, pedestrian movements, and road closures. Similarly, warehouse robots dynamically adjust routes to avoid collisions and optimize delivery times.
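Planning and replanning can be sketched with A* search on a small occupancy grid. This is one common planning algorithm, not necessarily what any particular vehicle or warehouse fleet uses, and the grid layout and the function name `astar` are assumptions for the example.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid where 1 marks an obstacle.

    Returns the path as a list of (row, col) cells, or None if the goal
    cannot be reached.
    """
    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0, start)]
    came_from = {start: None}
    cost_so_far = {start: 0}
    while frontier:
        _, cost, current = heapq.heappop(frontier)
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > cost_so_far[current]:
            continue  # stale queue entry; a cheaper route was found already
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < len(grid) and 0 <= nc < len(grid[0])
            if not inside or grid[nr][nc] == 1:
                continue
            new_cost = cost + 1
            if new_cost < cost_so_far.get((nr, nc), float("inf")):
                cost_so_far[(nr, nc)] = new_cost
                came_from[(nr, nc)] = current
                priority = new_cost + heuristic((nr, nc))
                heapq.heappush(frontier, (priority, new_cost, (nr, nc)))
    return None


# Hypothetical warehouse aisle map: 0 = free, 1 = shelf or obstacle.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]

print(astar(GRID, (0, 0), (2, 0)))
```

Replanning is then just a matter of updating the grid when a sensor reports a new obstacle and calling `astar` again from the robot's current cell. If the only gap in the middle row becomes blocked, the function returns `None`, signalling that the robot should wait or ask for help.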

Decision Making and Reasoning

Perhaps the most significant leap is the ability for robots to make decisions in uncertain situations. AI algorithms, including reinforcement learning and probabilistic reasoning, allow robots to weigh different options, assess risks, and choose actions that maximize a desired outcome, even when information is incomplete or the environment is unpredictable.

This is critical for tasks like autonomous exploration (e.g., a Mars rover deciding how to navigate rocky terrain), complex manipulation (e.g., picking a delicate item from a jumbled pile), or even strategic gameplay against human opponents.
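One simple form of the probabilistic reasoning described above is to pick the action with the highest expected utility. The sketch below applies that to a rover-style choice; the action names, probabilities, and utility values are invented for illustration and do not come from any real mission.

```python
def expected_utility(outcomes):
    """Sum of probability * utility over an action's possible outcomes."""
    return sum(probability * utility for probability, utility in outcomes)


def choose_action(actions):
    """Return the name of the action with the highest expected utility.

    `actions` maps an action name to a list of (probability, utility) pairs.
    """
    return max(actions, key=lambda name: expected_utility(actions[name]))


# Hypothetical options: each lists (probability, utility) for success and failure.
ROVER_OPTIONS = {
    "direct_route_over_rocks": [(0.7, 10.0), (0.3, -50.0)],
    "detour_around_rocks": [(0.95, 6.0), (0.05, -50.0)],
    "wait_for_more_data": [(1.0, 1.0)],
}

print(choose_action(ROVER_OPTIONS))
```

With these made-up numbers the direct route has the biggest payoff but a negative expected value once the risk of getting stuck is counted, so the detour wins. Changing the probabilities changes the decision, which is the sense in which the robot "weighs options" under uncertainty.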

Robots as True Collaborators

The integration of AI is fundamentally changing the relationship between humans and robots. We’re moving away from robots as isolated, caged-off machines that humans avoid, toward robots that work side by side with people. This is the territory of Human-Robot Interaction (HRI) research and of collaborative robots (cobots).

AI enables robots to perceive human presence, understand gestures (to some extent), recognize intention from movement, and even adapt their speed and path to ensure human safety. They can learn workflows by observing humans or be trained through demonstration rather than explicit programming. This opens up possibilities for robots to assist in tasks that require both robotic strength/precision and human dexterity/cognitive insight.
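The idea of adapting speed to a nearby person can be sketched as a simple distance-based speed limit. The thresholds and the function name `safe_speed` below are illustrative assumptions only; they are not taken from any safety standard, and a real cobot would derive such limits from a formal risk assessment.

```python
def safe_speed(distance_m, max_speed=1.0, stop_distance=0.5, slow_distance=2.0):
    """Limit robot speed (m/s) based on distance to the nearest person (m).

    Full stop inside stop_distance, full speed beyond slow_distance,
    and a linear ramp in between.
    """
    if distance_m <= stop_distance:
        return 0.0
    if distance_m >= slow_distance:
        return max_speed
    ramp = (distance_m - stop_distance) / (slow_distance - stop_distance)
    return max_speed * ramp


for distance in (3.0, 1.25, 0.3):
    print(distance, safe_speed(distance))
```

The perception side (estimating `distance_m` from cameras or other sensors) is where the AI does most of its work; once that estimate exists, the safety behavior itself can stay simple and predictable.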

Furthermore, AI facilitates multi-robot coordination. Fleets of robots in a warehouse or swarm robots for environmental monitoring can communicate, share information, and coordinate their actions intelligently to achieve a common goal more efficiently than working in isolation.

Impact Across Industries

This shift is not confined to research labs; it’s actively reshaping various sectors:

- Manufacturing: Flexible assembly lines using cobots, AI-powered quality inspection, predictive maintenance on robotic systems.

- Healthcare: Surgical robots with enhanced autonomy, AI-assisted diagnosis via robotic imaging, automated dispensing and logistics within hospitals, robotic patient care aids.

- Logistics and Warehousing: Autonomous forklifts and sorting robots, dynamic route optimization for delivery, robots handling complex and varied packages.

- Agriculture: Autonomous tractors, robots for precision weeding, selective harvesting of delicate crops based on ripeness determined by computer vision.

- Exploration: More autonomous rovers and underwater vehicles capable of making scientific decisions and navigating unknown territories.

- Service Sector: AI-powered robotic assistants in retail, hospitality, and even domestic environments, capable of interacting with customers and performing varied tasks.

Challenges and Considerations

While the progress is astounding, it’s important to acknowledge that these intelligent systems still face significant hurdles. Reliability in highly unstructured environments remains a challenge. Ensuring the safety of robots working in close proximity to humans is paramount and requires sophisticated sensing and decision-making capabilities. There are also ethical considerations surrounding job displacement as robots become more capable, the potential for bias in AI systems used by robots, and the need for clear legal and regulatory frameworks.

Building trust in autonomous systems is also key. Humans need to understand and predict robot behavior, especially in collaborative settings. This requires designing robots with clear communication interfaces and predictable decision-making processes.

The Horizon Ahead

Looking forward, the integration of AI into robotics promises systems that are even more adaptable, perceptive, and collaborative. We can anticipate robots with enhanced dexterity, better understanding of complex social cues, improved ability to learn from minimal examples, and greater autonomy in navigating and manipulating highly complex, dynamic spaces.

The boundary between robot and tool is dissolving. What emerges are intelligent agents capable of perceiving, reasoning, and acting in ways that augment human capabilities and tackle problems previously considered too complex for automation alone. The future isn’t just about robots doing tasks *for* us; it’s increasingly about robots working *with* us, powered by the ever-evolving capabilities of Artificial Intelligence.

Frequently Asked Questions about AI in Robotics

Q1: What’s the main difference between traditional automation robots and AI-powered robots?

Traditional automation robots are programmed to perform specific, repetitive tasks in controlled environments. They follow explicit instructions and cannot adapt to unexpected changes. AI-powered robots, on the other hand, use AI (like machine learning and computer vision) to learn, perceive their environment, make decisions, and adapt to novel situations, allowing them to handle complexity and uncertainty.

Q2: Are AI robots going to take all human jobs?

While AI in robotics will automate some tasks currently performed by humans, history suggests technology often changes the nature of work rather than eliminating it entirely. AI robots are also enabling new jobs in robot maintenance, programming, supervision, and human-robot team coordination. The focus is shifting towards collaboration, where robots handle repetitive or dangerous tasks, allowing humans to focus on work requiring creativity, critical thinking, and complex problem-solving.

Q3: How do AI robots learn?

AI robots can learn in several ways. Machine learning allows them to identify patterns and make predictions from large datasets. Reinforcement learning enables them to learn through trial and error, receiving rewards for desired behaviors. They can also learn through imitation, observing human actions and attempting to replicate them, or through simulation, practicing tasks in a virtual environment before attempting them in the real world.
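Trial-and-error learning can be shown in a few lines with tabular Q-learning. In this sketch a robot in a five-cell corridor starts at the left end and receives a reward only when it reaches the right end; the corridor, the hyperparameters, and the function name `train_corridor_policy` are assumptions chosen to keep the example tiny.

```python
import random


def train_corridor_policy(n_states=5, episodes=500, alpha=0.5,
                          gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a corridor; reward 1 for reaching the last cell.

    Returns the learned action ("left" or "right") for each non-goal cell.
    """
    rng = random.Random(seed)
    moves = (-1, +1)  # action 0 steps left, action 1 steps right
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            if rng.random() < epsilon:
                action = rng.randrange(2)  # explore
            else:
                action = 0 if q[state][0] >= q[state][1] else 1  # exploit
            next_state = min(max(state + moves[action], 0), goal)
            reward = 1.0 if next_state == goal else 0.0
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return ["right" if row[1] > row[0] else "left" for row in q[:goal]]


print(train_corridor_policy())
```

Nobody tells the robot that "right" is the correct direction. It stumbles onto the goal through random exploration, and the reward gradually propagates backward through the table until heading right looks best from every cell.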

Q4: Is Human-Robot Interaction (HRI) safe with AI-powered robots?

Safety is a primary focus in the development of collaborative robots (cobots). They are designed with sensors and AI algorithms that let them detect human presence, predict movements, and react quickly (for example, by slowing down or stopping) to avoid collisions. Safety standards such as ISO 10218 and ISO/TS 15066 govern their deployment in shared workspaces.

Q5: What are some examples of AI robots used today?

Examples include automated guided vehicles (AGVs) and autonomous mobile robots (AMRs) in warehouses, surgical robots assisting doctors, AI-powered quality inspection robots on production lines, autonomous drones for inspection and delivery, and early forms of service robots in hospitality or elder care.

The journey from simple automation to intelligent collaboration is an exciting one. As AI continues its rapid advancement, its integration with robotics will undoubtedly yield systems of increasing capability and sophistication, redefining industries and potentially reshaping aspects of our daily lives.