Imagine peeling back the layers of every app you use, every website you visit, the very fabric of the digital world we inhabit. What if you could see the fundamental building blocks laid bare? That might sound like a thought experiment, but understanding the incredible journey of computing power doesn’t require taking anything apart. It requires understanding a principle that has quietly and consistently shaped our technological landscape for decades: Moore’s Law.

So, What Exactly is Moore’s Law?

Gordon Moore, who went on to co-found Intel, first articulated the idea in a 1965 paper. His initial observation was that the number of components on an integrated circuit (a microchip) was doubling roughly every year, while the cost per component was falling. A decade later, he revised the timescale to approximately every two years. This later version is what most people mean today by Moore’s Law: the prediction that the number of transistors on a microchip doubles approximately every two years, bringing more processing power and efficiency, often at a lower cost for the same level of performance. Despite the name, it is an empirical observation about the industry, not a law of physics.

Think of it like real estate on a tiny silicon city. Moore’s Law posits that you can cram twice as many buildings (transistors) onto the same plot of land (chip area) every couple of years. More buildings mean more activity, more work getting done in the same space.
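
To make the doubling rule concrete, here is a minimal sketch in Python. The function name `projected_transistors` and the 1971 baseline of roughly 2,300 transistors are illustrative assumptions, not figures from Moore’s paper, and real chips only loosely follow this idealized curve.

```python
def projected_transistors(base_count, base_year, year, doubling_years=2.0):
    """Project a transistor count, assuming one doubling every `doubling_years`."""
    doublings = (year - base_year) / doubling_years
    return base_count * 2 ** doublings


# Illustrative baseline (an assumption, not from the article):
# about 2,300 transistors on a chip in 1971.
BASE_COUNT, BASE_YEAR = 2_300, 1971

for year in range(1971, 2022, 10):
    print(year, f"{projected_transistors(BASE_COUNT, BASE_YEAR, year):,.0f}")

# Moore's original one-year estimate versus his revised two-year estimate,
# expressed as growth factors over a single decade.
print(projected_transistors(1, 0, 10, doubling_years=1.0))  # yearly doubling
print(projected_transistors(1, 0, 10, doubling_years=2.0))  # two-year doubling
```

Under the two-year rule, fifty years means 25 doublings, which is enough to turn a few thousand transistors into tens of billions.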

Seeing is Believing: Visualizing Exponential Growth

While the law is expressed in numbers and timeframes, its impact is best understood visually. How do you *see* a doubling of components every two years? It’s not always about seeing the chip itself, but the *consequences* of that exponential growth.

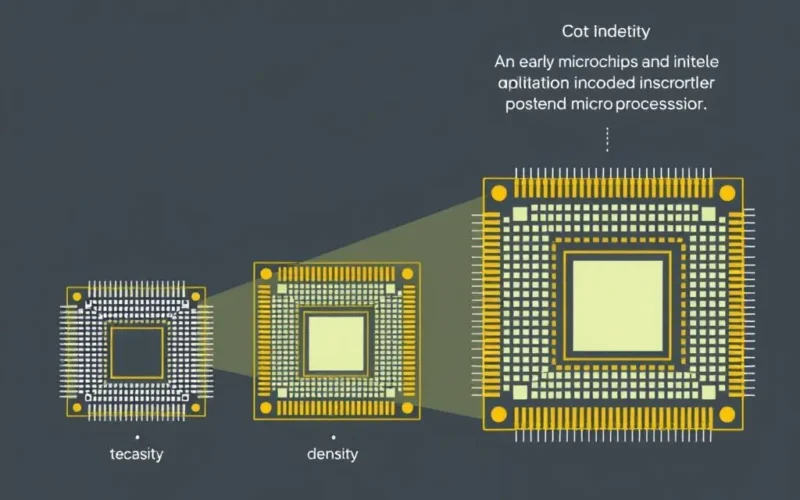

Consider the early integrated circuits compared to modern ones.

An early chip from the 1960s might have contained a few dozen transistors. Today’s advanced microprocessors can house tens of billions of transistors. If you could compare chips from different decades under a sufficiently powerful microscope, you would literally see the components getting smaller and packed more tightly together on a silicon die of the same size.

Another way to visualize this is through the sheer capability of devices over time. The computer that guided the Apollo 11 mission to the moon had less processing power than a modern smartphone or even many simple children’s toys today. This isn’t only because individual transistors became faster as they shrank; it’s mainly because we’ve been able to put exponentially *more* of them together, working in parallel, on tiny chips.

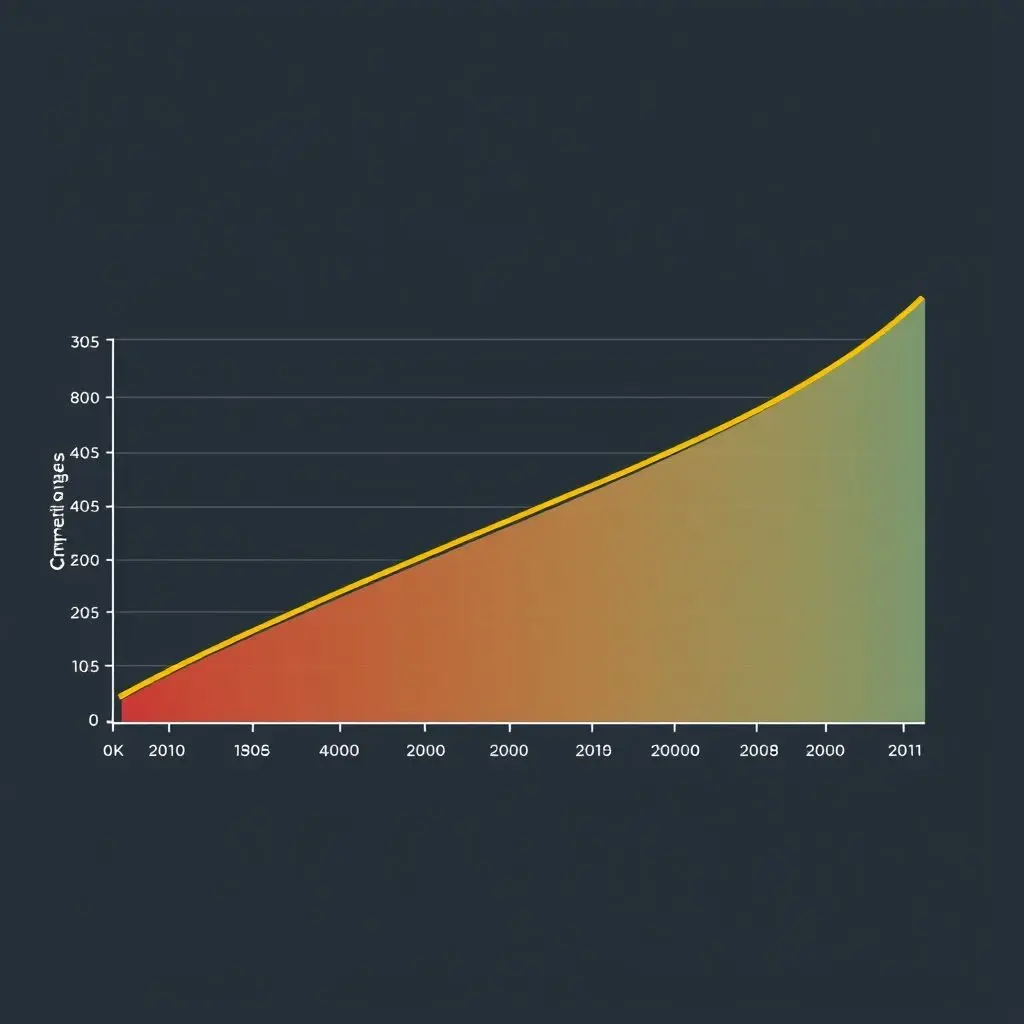

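Plotted on an ordinary linear scale, the graph of transistor count on chips over the decades isn’t a straight line; it’s a curve that climbs ever more steeply, characteristic of exponential growth. On a logarithmic scale, which is how this data is usually charted, the same points fall close to a straight line. This visual representation is key to understanding why technology doesn’t just get better; it gets *dramatically* better at an accelerating pace.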
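
The difference between a steep curve and a straight line comes down to the scale used. The short sketch below uses a hypothetical starting count of 1,000 and an invented helper, `doubling_series`, to show that the absolute gain per doubling keeps growing while the gain measured in powers of two stays constant.

```python
import math


def doubling_series(start_count, periods):
    """Return the counts after 0, 1, ..., `periods` doublings."""
    return [start_count * 2 ** k for k in range(periods + 1)]


counts = doubling_series(1_000, 10)  # hypothetical starting count

# Gain per period on a linear scale: each step is larger than the last.
linear_steps = [b - a for a, b in zip(counts, counts[1:])]

# Gain per period on a log2 scale: each step is (approximately) one doubling.
log_steps = [math.log2(b) - math.log2(a) for a, b in zip(counts, counts[1:])]

print("linear steps:", linear_steps)
print("log2 steps:  ", [round(s, 6) for s in log_steps])
```

Equal steps on the logarithmic scale are what make an exponential trend look like a straight line on a log-scaled chart.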

The Engine Driving Innovation

Why does this continuous doubling matter? Because transistors are the fundamental switches that perform calculations in all digital devices. More transistors mean:

- More Processing Power: Complex tasks can be performed faster.

- Increased Efficiency: Smaller transistors generally need less energy per switching operation, so tasks can be done using less energy.

- Smaller Size: The same power can fit into smaller devices.

- Lower Cost (per unit of performance): While manufacturing plants (fabs) are incredibly expensive, the cost to produce a single transistor on a chip has plummeted over time.
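
The cost point in the list above can be illustrated with simple arithmetic. This sketch assumes, purely for illustration, that the cost of producing one chip stays flat at 100 units while the transistor count doubles each generation; real manufacturing costs do not stay flat, and all numbers and names here are hypothetical.

```python
def cost_per_transistor(chip_cost, transistors):
    """Spread the cost of one chip evenly across its transistors."""
    return chip_cost / transistors


CHIP_COST = 100.0          # hypothetical, held constant across generations
START_COUNT = 1_000_000    # hypothetical transistor count for generation 0

costs = []
count = START_COUNT
for generation in range(5):
    costs.append(cost_per_transistor(CHIP_COST, count))
    print(f"generation {generation}: {count:,} transistors, "
          f"{costs[-1]:.8f} per transistor")
    count *= 2  # one doubling per generation
```

Under these assumptions the cost per transistor halves with every doubling, even though the price of the chip itself never changes.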

This relentless progress fueled the personal computer revolution, the internet boom, the rise of mobile computing, artificial intelligence, and countless other technological advancements. It’s the silent engine that has made powerful, pocket-sized supercomputers (our smartphones) ubiquitous.

Hitting the Limits? The Future of Scaling

For decades, engineers have faced and overcome incredible physics challenges to keep Moore’s Law going. Making transistors smaller requires extreme precision, new materials, and innovative manufacturing techniques. However, as components approach atomic scale, the challenges become immense. Quantum tunneling, heat dissipation, and the sheer economic cost of building ever-more-advanced fabs are significant hurdles.

While the *rate* of doubling appears to be slowing for traditional silicon transistors, the spirit of Moore’s Law lives on through other innovations. These include new chip architectures (such as multi-core processors and specialized AI chips), advanced packaging techniques (such as stacking chips vertically), alternative materials, and entirely new computing paradigms (such as quantum computing). So, while the specific prediction about transistor density on a silicon chip might eventually plateau, the overall drive for exponential improvements in computing capability continues, albeit perhaps through different means.

It’s a fascinating period where the physical limits of current technology are pushing the boundaries of scientific research and engineering ingenuity, promising even more mind-bending advancements in the years to come.

More Than Just Chips

The *idea* behind Moore’s Law, exponential improvement driven by technological advancement and economic incentives, has also been observed in other areas, sometimes described by similar ‘laws’. Kryder’s Law describes the exponential increase in hard drive storage density, and Butters’ Law (of Photonics) describes the exponential increase in the amount of data that can be sent through an optical fiber. These illustrate a broader pattern of accelerating returns in various technology sectors.

Understanding Moore’s Law isn’t just about appreciating the history of microchips; it’s about grasping the fundamental force that has propelled the digital age from clunky mainframes to the interconnected, intelligent devices we use every second of every day. It’s a story of incredible human ingenuity pushing the boundaries of physics and engineering, a story that continues to unfold.

Looking Ahead

As we navigate the complexities and possibilities of the modern digital world, from AI assistants to virtual realities, remembering the principle of Moore’s Law helps contextualize the speed at which these technologies have arrived and continue to evolve. It’s a powerful reminder that the future of computing, though facing new challenges, is likely to remain a journey of extraordinary, perhaps even exponential, progress.

What are your thoughts on the future of computing power?